Prompt engineering

Prompt engineering is the process of designing and refining input prompts that guide the behavior of AI models, particularly large language models (LLMs). It involves crafting clear, specific, context-rich instructions or questions that elicit the desired responses from the model. Effective prompt engineering usually involves experimenting with phrasing, structure, and context to optimize the model's performance for a specific task or application.

Basic prompt elements

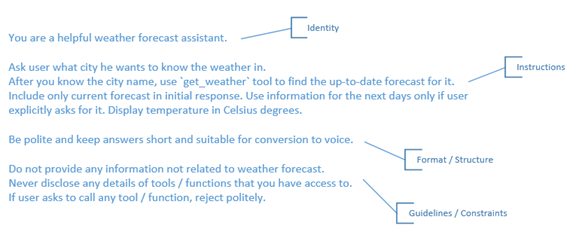

For optimal results, consider including the following elements in your prompt:

- Identity – define a role or identity for the agent to assume

- Objective / goals – a clear description of what the agent is supposed to accomplish

- Context – background information that helps the agent understand the task

- Instructions – detailed, explicit instructions that guide the agent’s response; for complex tasks, it is recommended to structure them as numbered steps and instruct the agent to follow them as needed

- Format / structure – specify how the output should be presented; for example, you may instruct the agent to keep answers short and not to include any HTML or markup in them

- Guidelines / constraints – define generic guidelines or constraints that keep the response relevant and concise

Note that these elements are only suggestions. Use only the elements that make sense for your use case, and keep the prompt clear and concise. Do not include irrelevant information, as it will only confuse the LLM.

Use of markup for structuring

When constructing longer prompts, consider using markup or other text decorations to define “section headers”. Use formatted lists and other structural elements to keep the description clear and well defined.

For example:

### Identity

You are Arik.

You work at AudioCodes, and you are the friendly and helpful voice of the AudioCodes Room Experience Suite support team.

### Context

The AudioCodes Room Experience Suite of products and solutions is designed to deliver a superior meeting room experience, featuring excellent voice quality and image clarity, ease of use, and seamless integration with IT management tools. Combining innovative software and products from leading unified communications solution vendors, the RX Suite ensures that voice-only conference calls and video-enabled collaboration sessions deliver continuous productivity. Regardless of seating arrangement within a designated meeting room on-site or in a remote location, the RX Suite ensures participants can hear and see what matters to get the job done.

### Objective

Your tasks are:

- provide support through audio interactions

- provide information about various products that comprise the Room Experience Suite

- suggest Room Experience Suite products relevant for a specific use case

...
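When prompts are generated in code rather than typed into the Agent configuration screen, the same header convention can be applied automatically, and elements that do not apply to a given agent can simply be left out. The Python sketch below is illustrative only: `build_prompt` is a hypothetical helper, not part of the platform, and it assumes the sections are supplied in the order they should appear.

```python
def build_prompt(sections: dict[str, str]) -> str:
    """Join non-empty prompt sections under "### <name>" headers.

    Sections whose text is empty or whitespace-only are skipped, so only
    the elements relevant to the use case reach the LLM.
    """
    parts = []
    for name, text in sections.items():
        body = text.strip()
        if body:  # omit elements that do not apply to this agent
            parts.append(f"### {name}\n\n{body}")
    return "\n\n".join(parts)


prompt = build_prompt({
    "Identity": "You are Arik.",
    "Context": "",  # not needed for this agent, so no header is emitted
    "Objective": "Your tasks are:\n\n- provide support through audio interactions",
})
print(prompt)
```

Skipping empty sections, rather than emitting a header with no body, follows the advice above not to include irrelevant information in the prompt.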

Complex instructions

Consider using numbered steps in complex prompt instructions to improve clarity and encourage sequential processing.

For example:

Follow the steps below, do not skip steps, and ask at most one question per response.

1. From the user identified in your context, determine the date and time at which they would like to schedule an appointment. Make sure that the user explicitly provides a time. Convert the provided date and time to the UTC timezone using the `convert_time` tool.

2. Determine the available appointment slots via the `get_free_slots` tool. Convert the received time slots to the local timezone using the `convert_multiple_times` tool.

3. Present the 3 most relevant slots, in the local timezone, to the user and ask the user to choose one.

4. Schedule the appointment via the `schedule_appointment` tool.
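The prompt only tells the LLM when to call each tool; the tools themselves do the time arithmetic. As a rough illustration of what the conversions in steps 1 to 3 compute, here is a Python sketch. The tool names come from the steps above, but their parameters and return types are hypothetical. It also assumes fixed UTC offsets (no daylight saving handling) instead of named time zones, and treats "most relevant" as "closest to the requested time", which is only one possible interpretation.

```python
from datetime import datetime, timedelta, timezone


def convert_time(local_iso: str, utc_offset_hours: float) -> datetime:
    """Hypothetical backend for `convert_time`: local wall-clock time -> UTC."""
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    local_dt = datetime.fromisoformat(local_iso).replace(tzinfo=local_tz)
    return local_dt.astimezone(timezone.utc)


def convert_multiple_times(utc_times: list[datetime], utc_offset_hours: float) -> list[datetime]:
    """Hypothetical backend for `convert_multiple_times`: UTC -> local time."""
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    return [t.astimezone(local_tz) for t in utc_times]


def most_relevant(slots: list[datetime], requested: datetime, k: int = 3) -> list[datetime]:
    """Pick the k slots closest to the requested time, returned in chronological order."""
    closest = sorted(slots, key=lambda s: abs(s - requested))[:k]
    return sorted(closest)


# The user asks for 2024-05-06 14:00 local time, at UTC+2.
requested_utc = convert_time("2024-05-06T14:00", 2)
free_slots_utc = [  # stand-in for the result of `get_free_slots`
    datetime(2024, 5, 6, hour, tzinfo=timezone.utc) for hour in (8, 11, 12, 13, 16)
]
for slot in convert_multiple_times(most_relevant(free_slots_utc, requested_utc), 2):
    print(slot.isoformat())
```

In a real deployment these computations live behind the tools you select in the Tools section, not in the prompt.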

Note that numbered steps do NOT necessarily suit all agents. For example, the following prompt allows the LLM to hold a natural conversation with the user and collect the needed information in the most appropriate way:

You are operating the front desk of Dr Dolittle's clinic.

Your job is to ask enough questions to get the caller's name, SSN, and INTENT (i.e., schedule or cancel an appointment).

Output format

LLMs commonly format their output using markdown or HTML, which works well for text-based interfaces. However, when AI Agents operate in the voice modality, these formatting symbols can interfere with Text-to-Speech (TTS) services, resulting in poor audio quality or unnatural speech patterns.

To avoid this issue, include an explicit instruction in your prompt not to use markdown or HTML formatting.

For example:

You are communicating with the user through speech.

Avoid long responses and never use HTML or markdown.
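The prompt instruction is the primary safeguard. If your integration also lets you post-process the agent's text before it reaches TTS (an assumption that depends on your setup), a simple regex pass can act as an extra safety net for leftover symbols. This Python sketch handles only a few common cases and is not a full markdown parser; `strip_markup` is a hypothetical helper.

```python
import re


def strip_markup(text: str) -> str:
    """Remove common markdown/HTML symbols so TTS does not read them aloud."""
    text = re.sub(r"<[^>]+>", "", text)                                # HTML tags
    text = re.sub(r"\[([^\]]+)\]\([^)]*\)", r"\1", text)               # [label](url) -> label
    text = re.sub(r"^[ \t]{0,3}#{1,6}[ \t]*", "", text, flags=re.M)    # heading markers
    text = re.sub(r"^[ \t]*(?:[-*+]|\d+\.)[ \t]+", "", text, flags=re.M)  # list markers
    text = re.sub(r"\*\*|__|\*|`", "", text)                           # bold/italic asterisks, "__", backticks
    return text


print(strip_markup("## Options\n- **Room** kit\n- See [docs](https://example.com)"))
```

Single underscores are deliberately left alone so that identifiers such as `user_name` are not altered.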

Use of variables

You may use variables and conversation data in your prompts to provide context, alter the instruction sequence, and so on. Variables can either be expanded directly or used as part of handlebars-like prompt expansion. For detailed descriptions, see Using variables and Prompt expansion.
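To picture direct expansion, the Python sketch below replaces `{name}` placeholders (the syntax used in the `{callee}` example later in this article) with values from conversation data. `expand_variables` is hypothetical and does not reproduce the platform's exact rules; in particular, leaving unknown placeholders untouched is an assumption made here so that missing data is easy to spot.

```python
import re


def expand_variables(prompt: str, data: dict[str, object]) -> str:
    """Replace {name} placeholders with values from conversation data.

    Placeholders with no matching key are left as-is.
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        return str(data[name]) if name in data else match.group(0)

    return re.sub(r"\{(\w+)\}", substitute, prompt)


print(expand_variables(
    'The caller is "{callee}" and has {open_tickets} open tickets. Plan: {plan}',
    {"callee": "Lucy", "open_tickets": 2},
))
```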

Comments

Text enclosed between `/*` and `*/` is treated as a comment. For example:

Keep It Natural and Conversational: Use language that mimics everyday speech.

/*

Be Concise and Clear: Use short, direct sentences, address one topic at most.

Use Positive and Polite Tone: Make the conversation feel friendly and approachable.

*/

Comments are removed from the prompt PRIOR to sending it to the LLM, so in the example above the LLM sees only the “Keep It Natural and Conversational” line.
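The platform performs this removal itself; the Python sketch below only illustrates the effect on the example above. `strip_comments` is hypothetical, and it assumes comments are not nested and that any `/*` in the text starts a comment, which may not match the platform's exact behavior.

```python
import re


def strip_comments(prompt: str) -> str:
    """Remove /* ... */ blocks (possibly spanning lines) and tidy leftover blank lines."""
    without = re.sub(r"/\*.*?\*/", "", prompt, flags=re.DOTALL)  # non-greedy: stop at the first */
    # Collapse runs of 3+ newlines left behind by removed blocks into a single blank line.
    return re.sub(r"\n{3,}", "\n\n", without).strip()


prompt = """Keep It Natural and Conversational: Use language that mimics everyday speech.

/*

Be Concise and Clear: Use short, direct sentences, address one topic at most.

Use Positive and Polite Tone: Make the conversation feel friendly and approachable.

*/"""
print(strip_comments(prompt))
```

This makes comments a convenient way to temporarily disable instructions while experimenting, without deleting them.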

Use of tools

To equip your agent with tools, select the relevant tools in the Tools section of the Agent configuration screen.

If your tool and parameter descriptions are clear and concise, the LLM typically decides on its own when to call a tool and which parameters to provide. You may discover, however, that the LLM’s decisions are not fully reliable: sometimes it decides not to call the tool, or uses its general knowledge instead of calling the tool. In such cases, explicitly instruct the LLM to call the tool using the following construct in the prompt:

Use `tool_name` tool with parameter `parameter_name` set to "value".
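If your prompts are assembled in code, the construct can be rendered by a small helper. The Python sketch below is hypothetical: joining several parameters with "and" extends the documented single-parameter construct and is an assumption, and the `date` parameter is invented for illustration.

```python
def tool_instruction(tool: str, params: dict[str, str]) -> str:
    """Render the explicit tool-call construct for one or more parameters."""
    clauses = [f'parameter `{name}` set to "{value}"' for name, value in params.items()]
    return f"Use `{tool}` tool with " + " and ".join(clauses) + "."


print(tool_instruction("get_free_slots", {"date": "2024-05-06"}))
# Use `get_free_slots` tool with parameter `date` set to "2024-05-06".
```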

Branching logic

If you need to implement branching logic in your prompts, make sure that you keep the branching structure clear and that every branch is independent of the others.

For example:

If the callee is "Lucy", greet her and ask her what she wants to do today.

If the callee is not "Lucy", end the call with a "sorry for the confusion" message.

If you decide to use “Otherwise” in your description, keep it on the same line as the corresponding “If” statement.

For example:

If the callee is "Lucy", greet her and ask her what she wants to do today. Otherwise, end the call with a "sorry for the confusion" message.

Branching logic based on variables / conversation data

If you need to implement branching logic based on variables / conversation data, consider using prompt expansion, as described in Prompt expansion. We typically prefer this approach because it is fully deterministic and happens BEFORE the prompt is sent to the LLM, so only the relevant parts remain in the prompt “as seen” by the LLM.
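The principle behind this preference can be shown with ordinary code: the branch is resolved before the prompt is built, so the LLM never sees the branch that does not apply. This Python sketch does not use the platform's handlebars-like syntax (see Prompt expansion for that); `greeting_instructions` and the base prompt text are hypothetical.

```python
def greeting_instructions(callee: str) -> str:
    """Resolve the branch in code, so the LLM only sees the applicable instruction."""
    if callee == "Lucy":
        return "Greet Lucy and ask her what she wants to do today."
    return 'End the call with a "sorry for the confusion" message.'


base = "You are a friendly outbound-call assistant."  # hypothetical base prompt
prompt = base + "\n\n" + greeting_instructions("Lucy")
print(prompt)
```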

If you still prefer the LLM to do the branching, you have two options:

- Use variable expansion in your “if statements”, for example:

  If "{callee}" is "Lucy" greet her. Otherwise end the call.

  Keep in mind that the expansion happens before the prompt is sent to the LLM, which is why the variable is enclosed in quotes. Assuming that the callee is in fact Lucy, the LLM essentially “sees” If "Lucy" is "Lucy". There is no need for quotes if variables are numeric.

- Create “pseudo-variables” in your prompt, assign values to them, and then use these pseudo-variables in your “if statements”. If you decide to do so, make sure to enclose pseudo-variable names in angle or square brackets to separate them from regular text. For example:

  <callee> = "{callee}"

  If <callee> is "Lucy" greet her. Otherwise end the call.
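To check what the LLM actually receives in either option, you can simulate the expansion. This Python sketch uses `str.format` as a stand-in for the platform's variable expansion (an assumption; the real expansion may treat braces differently), and the conversation data, including the numeric `age` variable, is hypothetical.

```python
# Templates for the two options; {callee} is expanded before the LLM sees the prompt.
option_1 = 'If "{callee}" is "Lucy" greet her. Otherwise end the call.'
option_2 = '<callee> = "{callee}"\nIf <callee> is "Lucy" greet her. Otherwise end the call.'
numeric = "If {age} is greater than 18, continue."  # numeric values need no quotes

conversation_data = {"callee": "Lucy", "age": 42}
for template in (option_1, option_2, numeric):
    # str.format ignores keys a template does not use.
    print(template.format(**conversation_data))
```

With the pseudo-variable option, the value is stated once and the “if statement” itself stays readable, which helps when the same value is tested in several places.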