Text-to-speech service information

To connect VoiceAI Connect Enterprise to a text-to-speech service provider, certain information is required from the provider, which is then used in the VoiceAI Connect Enterprise configuration for the bot.

Microsoft Azure Speech Services

Connectivity

To connect to Azure's Speech Service, you need to provide AudioCodes with your subscription key for the service. To obtain the key, see Azure's documentation.

The key is configured on VoiceAI Connect Enterprise using the credentials > key parameter in the providers section.

Note: The key is valid only for a specific region. The region is configured using the region parameter.
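As a rough sketch, the two parameters above might sit in a provider entry as follows, mirroring the layout of the other provider examples on this page. The name value, the region value, and the placement of region at the top level of the entry are assumptions. The provider type is left out because this section does not state its value.

```json
{
  "name": "my_azure",
  "credentials": {
    "key": "<subscription key from Azure>"
  },
  "region": "<region of your Speech resource, for example westeurope>"
}
```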

Language Definition

To define the language, you need to provide AudioCodes with the following from Azure's Text-to-Speech table:

- 'Locale' (language)
- 'Voice name' (gender based)

The 'Locale' and 'Voice name' values are configured on VoiceAI Connect Enterprise using the language and voiceName parameters, respectively. For example, for Italian, the language parameter should be configured to it-IT and the voiceName parameter to it-IT-ElsaNeural.
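The Italian example can be written as the following fragment. Only the two parameters named in the text are shown; where this fragment sits within the bot configuration is not covered here, so treat its placement as an assumption.

```json
{
  "language": "it-IT",
  "voiceName": "it-IT-ElsaNeural"
}
```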

Customized neural voice

If you have defined a customized synthetic voice with Azure's Custom Neural Voice feature, you need to configure VoiceAI Connect Enterprise using the ttsDeploymentId parameter to identify the associated text-to-speech endpoint.

For VoiceAI Connect Enterprise, this feature is supported from Version 2.6 and later.

Custom subdomain names for Azure Cognitive Services

Azure Cognitive Services provides a layered security model. This model enables you to restrict access to your Cognitive Services accounts to a specific subset of networks. For more details, see Azure's documentation.

This section describes how to use a custom subdomain and limit network access with VoiceAI Connect Enterprise.

Creating Custom Domain

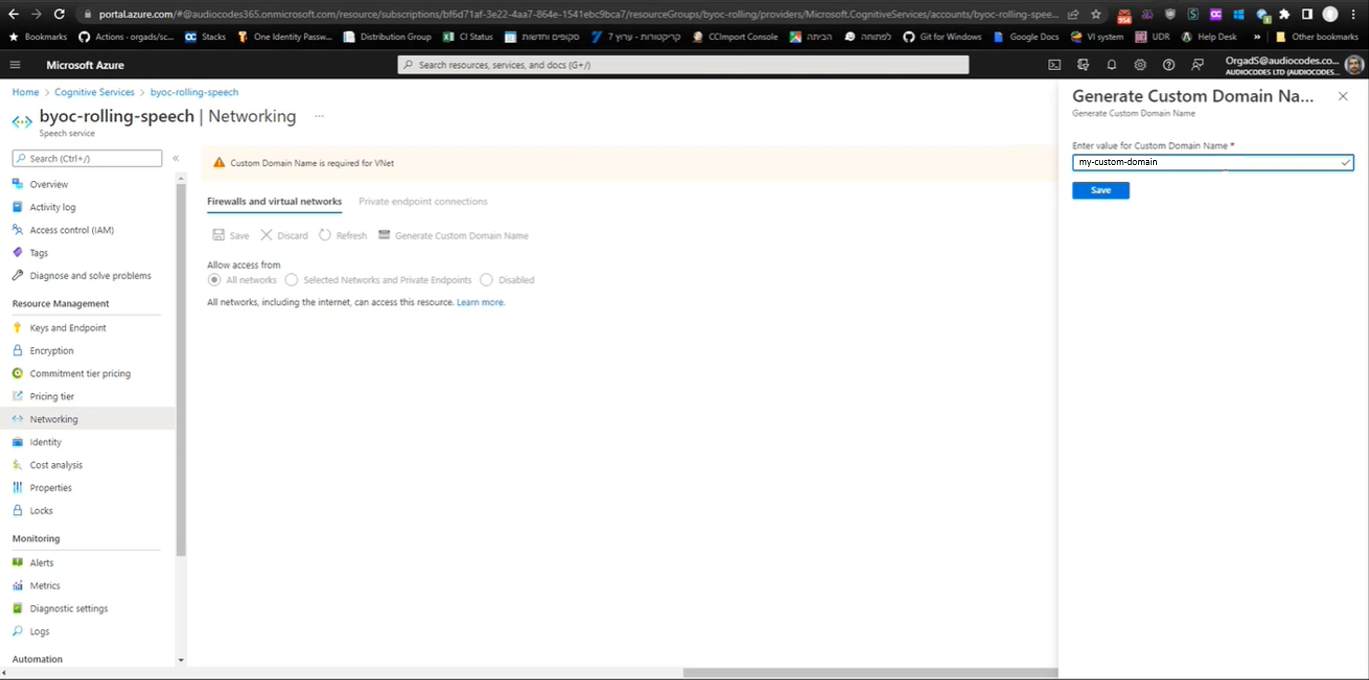

1. In Microsoft Azure’s Speech Services > [service name], select Networking (under Resource Management).

2. Click Generate Custom Domain Name.

3. Type in a custom domain name.

4. Click Save.

The custom domain name will also appear in Microsoft Azure’s Cognitive Services > Speech service.

Allowing only Selected Networks

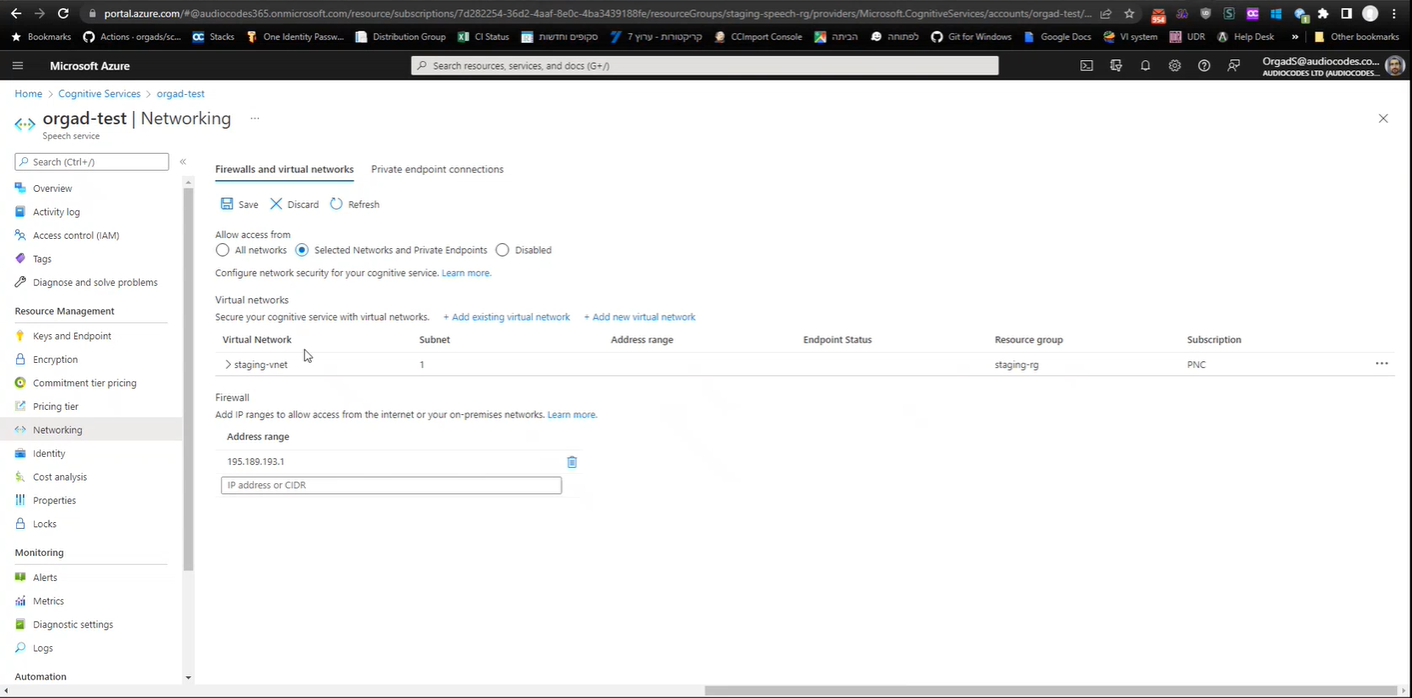

1. In Microsoft Azure’s Cognitive Services > Speech service, select the service name you created.

2. Click Networking (under Resource Management).

3. If not already selected, select the Firewalls and virtual networks tab.

4. Select Selected Networks and Private Endpoints.

5. Add virtual networks, or external IP addresses.

Setting up Private endpoint connections

1. In Microsoft Azure’s Cognitive Services > Speech service, select the custom domain you created.

2. Click Networking (under Resource Management).

3. Select the Private endpoint connections tab and create a private endpoint. For more details, see Azure's documentation.

4. In the provider, configure azureCustomSubdomain to the domain name (not FQDN, only the name) and set azureIsPrivateEndpoint to true.

The azureIsPrivateEndpoint parameter was deprecated in Version 3.10.1.
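A minimal sketch of the provider settings from step 4, assuming a hypothetical custom domain name of my-speech-resource (for which Azure's FQDN would be my-speech-resource.cognitiveservices.azure.com). Only the name part is entered. Because azureIsPrivateEndpoint is deprecated from Version 3.10.1, it is relevant only for earlier versions.

```json
{
  "azureCustomSubdomain": "my-speech-resource",
  "azureIsPrivateEndpoint": true
}
```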

Google Cloud Text-to-Speech

Connectivity

To connect to Google Cloud Text-to-Speech service, you need to provide AudioCodes with the following:

- Private key of the Google service account. To create the account key, refer to Google's documentation. From the JSON object representing the key, you need to extract the private key (including the "-----BEGIN PRIVATE KEY-----" prefix) and the service account email.
- Client email

Configuration

The private key and the service account email are configured on VoiceAI Connect Enterprise using the privateKey and clientEmail parameters, respectively, in the providers > credentials section.
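As an illustration, the credentials section might look like the fragment below. In the key file downloaded from Google, the two source values are the private_key and client_email fields. The bracketed values are placeholders, and the rest of the provider entry (including its type) is omitted because this section does not state it.

```json
{
  "credentials": {
    "privateKey": "-----BEGIN PRIVATE KEY-----\n<key material>\n-----END PRIVATE KEY-----\n",
    "clientEmail": "<service-account-name>@<project-id>.iam.gserviceaccount.com"
  }
}
```

Keeping the \n escapes exactly as they appear in Google's JSON file avoids breaking the key when it is pasted into another JSON document.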

Language Definition

To define the language, you need to provide AudioCodes with the following from Google's Supported voices and languages table:

- 'Language code' (language)
- 'Voice name' (gender based)

The 'Language code' and 'Voice name' values are configured on VoiceAI Connect Enterprise using the language and voiceName parameters, respectively. For example, for English (US), the language parameter should be configured to en-US and the voiceName parameter to en-US-Wavenet-A.

AWS Amazon Polly

Connectivity

To connect to Amazon Polly Text-to-Speech service, see Text-to-speech service information for required information.

Language Definition

To define the language, you need to provide AudioCodes with the following information from the Voices in Amazon Polly table:

- 'Language'
- 'Name/ID' (gender based)
- Support (Yes or No) for 'Neural Voice' or 'Standard Voice'

The 'Language' and 'Name/ID' values are configured on VoiceAI Connect Enterprise using the language and voiceName parameters, respectively. For example, for English (US), the language parameter should be configured to English, US (en-US) and the voiceName parameter to Matthew.

The usage of 'Neural Voice' or 'Standard Voice' is configured on VoiceAI Connect Enterprise using the ttsEnhancedVoice parameter. Refer to the Voices in Amazon Polly table to check if the specific language voice supports Neural Voice and/or Standard Voice.
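A hedged sketch of selecting a neural voice. It assumes that ttsEnhancedVoice is a boolean in which true requests the Neural Voice and false the Standard Voice; this section does not state the parameter's type, so verify it before use. The language parameter is omitted here.

```json
{
  "voiceName": "Matthew",
  "ttsEnhancedVoice": true
}
```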

The ttsEnhancedVoice parameter (neural voices) is supported from Version 3.0 and later.

Nuance

Connectivity

VoiceAI Connect Enterprise can connect to the Nuance Vocalizer for Cloud (NVC) speech service using either the WebSocket API or the open source gRPC (Remote Procedure Calls) API. To connect to Nuance Mix, it must use the gRPC API.

To select the Nuance API type, set the type parameter in the providers section to nuance or nuance-grpc.

You need to provide AudioCodes with the URL of your Nuance text-to-speech endpoint instance. This URL (with port number) is configured on VoiceAI Connect Enterprise using the ttsHost parameter.

Note: Nuance offers a cloud service (Nuance Mix) as well as an option to install an on-premise server. The on-premise server is without authentication while the cloud service uses OAuth 2.0 authentication (see below).

VoiceAI Connect Enterprise supports Nuance Mix, Nuance Conversational AI services (gRPC) API interfaces. VoiceAI Connect Enterprise authenticates itself with Nuance Mix (which is located in the public cloud), using OAuth 2.0. To configure OAuth 2.0, use the following providers parameters: oauthTokenUrl, credentials > oauthClientId, and credentials > oauthClientSecret.
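Putting the Nuance Mix parameters together, a provider entry might look like the sketch below. The parameter names come from this section; the name value is hypothetical, all bracketed values are placeholders, and the top-level placement of ttsHost and oauthTokenUrl is an assumption based on the other provider examples on this page.

```json
{
  "name": "my_nuance_mix",
  "type": "nuance-grpc",
  "ttsHost": "<host:port of your Nuance text-to-speech endpoint>",
  "oauthTokenUrl": "<OAuth 2.0 token URL supplied by Nuance>",
  "credentials": {
    "oauthClientId": "<client ID>",
    "oauthClientSecret": "<client secret>"
  }
}
```

For an on-premise server, which works without authentication, the three OAuth fields would presumably be left out.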

Nuance Mix is supported only by VoiceAI Connect Enterprise from Version 2.6 and later.

Language Definition

To define the language, you need to provide AudioCodes with the following from Nuance's Vocalizer Language Availability table:

- 'Language Code' (language)
- 'Voice' (gender)

The 'Language Code' and 'Voice' values are configured on VoiceAI Connect Enterprise using the language and voiceName parameters, respectively. For example, for English (US), the language parameter should be configured to en-US and the voiceName parameter to Kate.

Nuance Vocalizer for Cloud

To define the language, you need to provide AudioCodes with the language code from Nuance.

This value (ISO 639-1 format) is configured on VoiceAI Connect Enterprise using the language parameter. For example, for English (USA), the parameter should be configured to en-US.

ReadSpeaker

Connectivity

To connect to ReadSpeaker Text-to-Speech service, enter the information provided by your ReadSpeaker account manager upon delivery of the service.

For authentication, enter the entire string of the authorization key as provided by ReadSpeaker. This value is a combination of both your ReadSpeaker account ID and a private key (e.g., "1234.abcdefghijklmnopqrstuvxuz1234567"). This value should be configured in VoiceAI Connect Enterprise using the credentials > key parameter under the providers section.

To request a new key, contact the ReadSpeaker support team or your ReadSpeaker account manager.

The endpoint value to use in your AudioCodes implementation is provided by the ReadSpeaker team.

Language Definition

To define the language and voice, enter the language and voice values as provided by ReadSpeaker.

For language and voice, the following needs to be defined:

- 'Language code' (language)
- 'Voice'

The 'Language code' and 'Voice' values are configured on VoiceAI Connect Enterprise using the language and voiceName parameters, respectively. For example, for English (US) using the voice Paul, the language parameter should be configured to English, US (en-US), and the voiceName parameter to Paul.

Yandex

To connect to Yandex, contact AudioCodes for information.

ElevenLabs

For VoiceAI Connect Enterprise, this feature is supported from Version 3.22 and later.

Connectivity

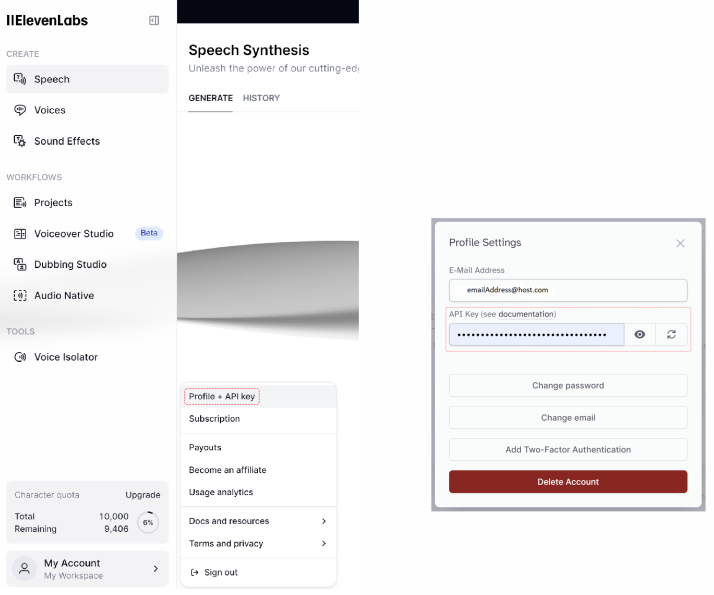

To connect VoiceAI Connect Enterprise to ElevenLabs text-to-speech service, you need to provide AudioCodes with the following from ElevenLabs:

- API key: The key can be obtained in the Profile Settings dialog box in the ElevenLabs management interface.

This key must be configured on VoiceAI Connect Enterprise using the credentials > key parameter under the providers section.

The provider type under the providers section must be configured to elevenlabs.

For example:

    {
      "name": "my_elevenlabs",
      "type": "elevenlabs",
      "credentials": {
        "key": "api key from elevenlabs"
      }
    }

Text-to-Speech (TTS) URL Configuration

The default ElevenLabs TTS URL is: https://{host}/v1/text-to-speech/{voice_id}/stream

- The default value for {host} is api.elevenlabs.io.
- You can change this host by setting the provider ttsHost parameter.
- The {voice_id} value is determined by the bot’s voiceName parameter.
- If no voiceName is specified, the system uses the default Rachel (English) voice, which corresponds to voice ID 21m00Tcm4TlvDq8ikWAM in ElevenLabs.

You can also override the entire URL by using the provider ttsUrl parameter.

In this case, you can either specify a fixed voice ID directly in the URL or keep the {voice_id} placeholder, which will automatically be replaced by the configured bot voiceName parameter.
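As a sketch of the full-URL override, the provider entry below sets ttsUrl to the default URL with the {voice_id} placeholder kept, so the bot's voiceName still selects the voice. Replacing {voice_id} with a literal voice ID would instead pin one voice for every bot that uses this provider.

```json
{
  "name": "my_elevenlabs",
  "type": "elevenlabs",
  "credentials": {
    "key": "api key from elevenlabs"
  },
  "ttsUrl": "https://api.elevenlabs.io/v1/text-to-speech/{voice_id}/stream"
}
```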

Language Definition

To define the language and voice, enter the language and voice values as provided by ElevenLabs.

- voice-id: Obtain this value from ElevenLabs (refer to their documentation Fetching the voice-id). VoiceAI Connect Enterprise's default voice-id is "21m00Tcm4TlvDq8ikWAM".
- model-id: (Optional) The ID of the ElevenLabs model to use, as listed in ElevenLabs' models documentation.

The 'voice-id' value is configured on VoiceAI Connect Enterprise using the voiceName parameter.

The 'model-id' is configured on VoiceAI Connect Enterprise using the ttsModel parameter.
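For illustration, the fragment below pairs the default voice ID with a model ID. The model ID eleven_multilingual_v2 is only an example of an ElevenLabs model identifier; whether it suits your account and language is an assumption to check against ElevenLabs' model list.

```json
{
  "voiceName": "21m00Tcm4TlvDq8ikWAM",
  "ttsModel": "eleven_multilingual_v2"
}
```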

Training Data Option

Some speech providers offer an option to retain customer data for the purpose of improving their speech models.

Setting the allowTrainingData dynamic bot parameter to true for a bot enables this option for the TTS and/or STT service provider, allowing retention of the customer's data.

The default value is false.
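Opting in is a single setting, sketched below as a bare fragment. Where the fragment sits within the bot configuration is not described in this section.

```json
{
  "allowTrainingData": true
}
```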

Advanced Parameters

ElevenLabs advanced parameters can be added in the provider section. For a list of the advanced parameters, refer to ElevenLabs documentation.

- Parameters listed under 'Query Parameters' in ElevenLabs documentation should be placed under the query section.
- Parameters listed under 'Body' in ElevenLabs documentation should be placed under the body section.

Example of advanced configuration:

    {
      "ttsOverrideConfig": {
        "query": {
          "optimize_streaming_latency": 2
        },
        "body": {
          "voiceSettings": {
            "stability": 3
          }
        }
      }
    }

Azure OpenAI

For VoiceAI Connect Enterprise, this feature is supported from Version 3.24.2 and later.

VoiceAI Connect Enterprise now supports a custom speech service for text-to-speech (TTS) using Azure OpenAI. This allows you to use Azure-hosted OpenAI TTS models, such as gpt-4o-mini-tts, for synthesizing speech responses.

Connectivity

To connect VoiceAI Connect Enterprise to the Azure OpenAI text-to-speech service, you must create an OpenAI resource in Azure and deploy a TTS model. For more information, see Azure's documentation.

Configure the parameters below using values from the Azure OpenAI resource.

How to use it?

This service is configured at the provider level by specifying the following parameters in the speechProviders section of the configuration file.

| Parameter | Type | Description |
|---|---|---|
| azureOpenAIEndpoint | String | Describes the endpoint of the Azure OpenAI service. It can be found either in the Azure OpenAI resource under Resource Management → Keys and Endpoint, or in the deployment details in Azure AI Foundry. |
|  | String | This parameter was deprecated in Version 3.24.5. Use the ttsAzureOpenAIDeployment parameter instead. Describes the deployment name for the Azure OpenAI service. It can be found in the deployment details in Azure AI Foundry. Example: "gpt-4o-mini-tts". |
| ttsAzureOpenAIDeployment | String | Describes the deployment name for the Azure OpenAI TTS service. It can be found in the deployment details in Azure AI Foundry. Example: "gpt-4o-mini-tts". For VoiceAI Connect Enterprise, this parameter is supported from Version 3.24.5 and later. |

These parameters are applicable only when type is set to "azure-openai" in the provider configuration.

speechProviders Configuration

The speechProviders array includes configuration blocks for each speech provider. To use Azure OpenAI for text-to-speech, set the type to "azure-openai" and include the mandatory parameters as shown below.

Example Configuration:

    {
      "name": "AzureOpenAI TTS <abc12345>",
      "type": "azure-openai",
      "credentials": {
        "key": "api key from Azure OpenAI Resource"
      },
      "azureOpenAIEndpoint": "https://your-resource-name.openai.azure.com/",
      "ttsAzureOpenAIDeployment": "gpt-4o-mini-tts",
      "azureOpenAIAPIVersion": "2025-03-01-preview"
    }

Note: The Azure OpenAI provider supports both text-to-speech (TTS) and speech-to-text (STT). However, these functions require two separate Azure OpenAI provider configurations: one for TTS and one for STT.

Deepgram

Connectivity

To connect VoiceAI Connect Enterprise with Deepgram's text-to-speech service, you need to provide AudioCodes with the following:

- Deepgram API key: You can obtain an API key by either signing up for an account at Deepgram’s website, or by contacting Deepgram’s sales team.

This key must be configured on VoiceAI Connect Enterprise using the credentials > key parameter under the providers section.

The provider type under the providers section must be configured to deepgram.

For example:

    {
      "name": "my_deepgram",
      "type": "deepgram",
      "credentials": {
        "key": "API key from Deepgram"
      }
    }

The default URL to Deepgram's API is api.deepgram.com. However, you can override this URL using the ttsHost parameter.
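A sketch of overriding the host, for instance when Deepgram has given you a dedicated endpoint. The bracketed value is a placeholder, and the top-level placement of ttsHost in the provider entry is an assumption based on how other provider-level parameters are shown on this page.

```json
{
  "name": "my_deepgram",
  "type": "deepgram",
  "credentials": {
    "key": "API key from Deepgram"
  },
  "ttsHost": "<hostname of your Deepgram endpoint>"
}
```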

Voice Definition

Deepgram offers various text-to-speech voices, as listed in Deepgram's documentation under the Values column (for example, "aura-asteria-en" for an English US female voice). The voice is configured on VoiceAI Connect Enterprise using the voiceName parameter.
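Using the voice from the example above, the setting would look like this fragment. Its placement within the bot configuration is not described here and is left as an assumption.

```json
{
  "voiceName": "aura-asteria-en"
}
```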

Training Data Option

Some speech providers offer discounts on their services if the customer agrees to allow the provider to use and possibly retain their data to help train the provider's models.

Setting the allowTrainingData dynamic bot parameter to true for a bot enables this option for the TTS and/or STT service provider, allowing retention of the customer's data.

The default value is false.

Connecting Deepgram using AudioCodes Live Hub

If you want to connect to Deepgram's speech services using AudioCodes Live Hub:

1. Sign in to the Live Hub portal.

2. From the Navigation Menu pane, click Speech Services.

3. Click the Add new speech service button, and then do the following:

   - In the 'Speech service name' field, type a name for the speech service.
   - Select only the Text to Speech check box.
   - Select the Generic provider option.
   - Click Next.

4. In the 'Authentication Key' field, enter the token supplied by Deepgram.

5. In the 'Text to Speech (TTS) URL' field, enter the URL supplied by Deepgram.

6. Click Create.

Almagu

Connectivity

To connect to Almagu, contact AudioCodes for information.

Language Definition

To define the language, you need to provide AudioCodes with the following from Almagu documentation:

- 'Voice'

The 'Voice' value is configured on VoiceAI Connect Enterprise using the language parameter.

Cartesia

Connectivity

VoiceAI Connect Enterprise supports the Cartesia text-to-speech (TTS) provider.

To connect VoiceAI Connect Enterprise to Cartesia, you must obtain an API key from Cartesia and configure it in the provider credentials (see Cartesia documentation).

The API key must be configured using the credentials > key parameter under the provider configuration.

Default model and voice

The default Cartesia TTS model is sonic-2. You can override the default model using the ttsModel parameter. You can retrieve available voices by querying the Cartesia Voices API https://api.cartesia.ai/voices/ (see Cartesia's documentation).

If the Administrator doesn't configure a voice name using the voiceName parameter, Ethan is used as the default voice.

Provider configuration

This service is configured at the provider level by specifying the Cartesia provider in the speechProviders section of the configuration file.

The provider type must be set to cartesia.

Example configuration

    {
      "name": "my_cartesia",
      "type": "cartesia",
      "credentials": {
        "key": "API key from Cartesia"
      }
    }

Streaming support

Cartesia supports text-to-speech streaming using WebSocket.

Cartesia is supported only by VoiceAI Connect Enterprise from Version 3.24.7 and later.