Speech-to-text service information

To connect VoiceAI Connect to a speech-to-text service provider, certain information is required from the provider, which is then used in the VoiceAI Connect configuration for the bot.

Microsoft Azure Speech Services

Connectivity

To connect to Azure's Speech Service, you need to provide AudioCodes with your subscription key for the service. To obtain the key, see Azure's documentation at https://docs.microsoft.com/en-us/azure/cognitive-services/speech-service/get-started.

The key is configured on VoiceAI Connect using the credentials > key parameter in the providers section.

Note: The key is only valid for a specific region. The region is configured using the region parameter.
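As a rough illustration, a provider entry for Azure might look like the sketch below. The credentials > key and region parameters come from the description above; the name value, the type value of azure, and the westeurope region are assumptions used only for illustration, so verify them against your own deployment.

```json
{
  "name": "my_azure",
  "type": "azure",
  "region": "westeurope",
  "credentials": {
    "key": "<subscription key from Azure>"
  }
}
```

The region must be the one in which the subscription key was issued, because the key is valid only for that region.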

Language Definition

To define the language, you need to provide AudioCodes with the following from Azure's Speech-to-text table:

- 'Locale' (language)

This value is configured on VoiceAI Connect using the language parameter. For example, for Italian, the parameter should be configured to it-IT.
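A minimal sketch of a bot entry that sets the language, assuming the language parameter sits in the bot's configuration under the bots section (as stated for other providers on this page). The bot name is hypothetical:

```json
{
  "name": "my_bot",
  "language": "it-IT"
}
```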

VoiceAI Connect can also use Azure's Custom Speech service. For more information, see Azure's documentation at https://docs.microsoft.com/en-us/azure/cognitive-services/speech-service/how-to-custom-speech-deploy-model. If you use this service, you need to provide AudioCodes with the custom endpoint details.

Google Cloud Speech-to-Text

Connectivity

To connect to Google Cloud Speech-to-Text service, you need to provide AudioCodes with the following:

- Private key of the Google service account. To create the account key, refer to Google's documentation. From the JSON object representing the key, you need to extract the private key (including the "-----BEGIN PRIVATE KEY-----" prefix) and the service account email.
- Client email

Configuration

The private key and the service account email are configured on VoiceAI Connect using the privateKey and clientEmail parameters in the providers > credentials section.
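The credentials configuration could be sketched as follows. The privateKey and clientEmail parameters are taken from the text above; the name value and the type value of google are assumptions, and the angle-bracket placeholders stand for values copied from the service account's JSON key file.

```json
{
  "name": "my_google",
  "type": "google",
  "credentials": {
    "privateKey": "-----BEGIN PRIVATE KEY-----\n<key material>\n-----END PRIVATE KEY-----\n",
    "clientEmail": "<service-account-name>@<project-id>.iam.gserviceaccount.com"
  }
}
```

Copy the private key exactly as it appears in the key file, including the escaped newlines, so that the prefix and suffix lines remain intact.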

API Version

To choose the API version for the Speech-to-Text service, configure the googleSttVersion parameter. This parameter is configured per bot by the Administrator, or dynamically by the bot during conversation.

Google Cloud Speech-to-Text V2 Recognizers

Speech-to-Text V2 supports a Google Cloud resource called recognizers. Recognizers represent stored and reusable recognition configuration. You can use them to logically group together transcriptions or traffic for your application. For more information, refer to Google's documentation on Recognizers.

The following parameter defines the Recognizer ID and is configured per bot by the Administrator, or dynamically by the bot during conversation:

Language Definition

To define the language, you need to provide AudioCodes with the following from Google's Cloud Speech-to-Text table:

- 'languageCode'

This value is configured on VoiceAI Connect using the language parameter. For example, for English (South Africa), the parameter should be configured to en-ZA.

Nuance

Connectivity

VoiceAI Connect can connect to the Nuance Krypton speech service using either the WebSocket API or the open source gRPC (Remote Procedure Calls) API. To connect to Nuance Mix, it must use the gRPC API.

To select the Nuance API type, set the type parameter in the providers section to nuance or nuance-grpc.

You need to provide AudioCodes with the URL of your Nuance speech-to-text endpoint instance. This URL (with port number) is configured on VoiceAI Connect using the sttHost parameter.

Note: Nuance offers a cloud service (Nuance Mix) as well as an option to install an on-premise server. The on-premise server does not use authentication, while the cloud service uses OAuth 2.0 authentication (see below).

VoiceAI Connect supports the Nuance Mix (Nuance Conversational AI services) gRPC API interfaces. VoiceAI Connect authenticates itself with Nuance Mix, which is located in the public cloud, using OAuth 2.0. To configure OAuth 2.0, use the following providers parameters: oauthTokenUrl, credentials > oauthClientId, and credentials > oauthClientSecret.

Nuance Mix is supported only by VoiceAI Connect Enterprise Version 2.6 and later.
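Putting the Nuance Mix parameters together, a provider entry might look like the following sketch. All parameter names are taken from the text above; the name value is hypothetical and the angle-bracket placeholders must be replaced with the values supplied by Nuance.

```json
{
  "name": "my_nuance_mix",
  "type": "nuance-grpc",
  "sttHost": "<address>:<port>",
  "oauthTokenUrl": "<OAuth 2.0 token URL from Nuance>",
  "credentials": {
    "oauthClientId": "<client ID>",
    "oauthClientSecret": "<client secret>"
  }
}
```

For an on-premise server, which does not use authentication, the oauthTokenUrl and credentials entries would presumably be omitted.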

Language Definition

- Nuance Vocalizer: To define the language, you need to provide AudioCodes with the following from Nuance's Vocalizer Language Availability table:
  - 'Language Code'

  This value (ISO 639-1 format) is configured on VoiceAI Connect using the language parameter. For example, for English (USA), the parameter should be configured to en-US.
- Nuance Krypton (Ver. 4.5) WebSocket-based API: To define the language, you need to provide AudioCodes with the language code from Nuance. This value (ISO 639-3 format) is configured on VoiceAI Connect using the language parameter under the bots section. For example, for English (USA), the parameter should be configured to eng-USA.

Amazon Transcribe Speech-to-Text Service

To connect to Amazon Transcribe speech-to-text service, you need to provide AudioCodes with the following:

- An account with admin permissions to use the transcribe feature (see Amazon's documentation).
- AWS account keys:
  - Access key
  - Secret access key
- AWS Region (e.g., "us-west-2")

VoiceAI Connect is configured to connect to Amazon Transcribe, by setting the type parameter to aws under the providers section.

For the languages supported by Amazon Transcribe, see Amazon's documentation. The language value is configured on VoiceAI Connect using the language parameter under the bots section.

Amazon Transcribe is supported only by VoiceAI Connect Enterprise Version 3.4 and later.

AmiVoice

To define the AmiVoice speech-to-text engine on VoiceAI Connect, you need to provide AudioCodes with the following from AmiVoice's customer service:

- Provider Type: Set the provider's type parameter to "amivoice".
- Voice Recognition API Endpoint: This is the address of the AmiVoice speech-to-text engine and is configured on VoiceAI Connect using the sttUrl parameter of the provider configuration. For example: ws://mrcp3.amivoicethai.com:14800.
- Connection Engine Name: This is the desired type of speech-to-text engine being used and is configured on VoiceAI Connect using the folderId parameter of the provider configuration. For example: AmiBiLstmThaiTelephony8k_GLM.
- App Key: This is the credential key for connecting to the AmiVoice engine and is configured using the token parameter in the credentials section of the provider configuration.

AmiVoice is supported only by VoiceAI Connect Enterprise Version 2.8 and later.
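The four AmiVoice settings listed above can be combined into one provider entry, sketched below. The parameter names and example values come from the list; the name value is hypothetical and the token placeholder must be replaced with the App Key supplied by AmiVoice.

```json
{
  "name": "my_amivoice",
  "type": "amivoice",
  "sttUrl": "ws://mrcp3.amivoicethai.com:14800",
  "folderId": "AmiBiLstmThaiTelephony8k_GLM",
  "credentials": {
    "token": "<App Key from AmiVoice>"
  }
}
```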

AudioCodes LVCSR

Connectivity

To connect VoiceAI Connect to the AudioCodes LVCSR service, set the type parameter in the providers section to "audiocodes-lvcsr".

You must provide AudioCodes with the URL of your AudioCodes LVCSR speech-to-text endpoint instance. This URL (<address>:<port>) is configured on VoiceAI Connect using the sttHost parameter.

Alternatively, you can configure it using the sttUrl parameter, which also supports secure connections. For example:

wss://<sttHost>/api/v1/speech:recognizeLVCSR

The optional key is passed as a query parameter in the WebSocket URL. If it is not provided, the key "1" is used.

AudioCodes LVCSR speech-to-text provider is supported only by VoiceAI Connect Enterprise Version 3.0 and later.

For OAuth authentication, configure oauthTokenUrl, and in credentials configure oauthClientId and oauthClientSecret.

Example:

```json
{
  "name": "my_dnn",
  "type": "audiocodes-lvcsr",
  "sttUrl": "wss://my.server/stt/api/v1/speech:recognizeLVCSR",
  "oauthTokenUrl": "https://my.server:8443/realms/master/protocol/openid-connect/token",
  "credentials": {
    "oauthClientId": "service_client",
    "oauthClientSecret": "T0ps3Cr3T"
  }
}
```

The following parameter is configured per bot by the Administrator, or dynamically by the bot during conversation:

| Parameter | Type | Description |
|---|---|---|
| stopRecognitionMaintainSession | Boolean | Enables the bot to stop or start speech recognition. This feature is applicable only to VoiceAI Connect Enterprise (Version 3.20-2 and later). |

Yandex

To connect to Yandex, please contact AudioCodes for information.

Deepgram

To connect VoiceAI Connect with Deepgram's speech-to-text service, you need to provide AudioCodes with the following:

- Deepgram API key: You can obtain an API key by either signing up for an account at Deepgram’s website, or by contacting Deepgram’s sales team.
- Deepgram Speech Model: For a list of the models (default is 'nova-2'), see Deepgram's documentation.

The API key must be configured on VoiceAI Connect using the credentials > key parameter under the providers section.

The model must be configured on VoiceAI Connect using the sttModel parameter.

The provider type under the providers section must be configured to deepgram.

For example:

```json
{
  "name": "my_deepgram",
  "type": "deepgram",
  "credentials": {
    "key": "API key from Deepgram"
  }
}
```

The default URL to Deepgram's API is api.deepgram.com. However, you can override this URL using the sttUrl parameter.

Language Definition

To define the language, provide the appropriate BCP-47 language code from Deepgram’s documentation. This is configured on VoiceAI Connect using the language parameter.

Training Data Option

Some speech providers offer discounts on their services if the customer agrees to allow the provider to use and possibly retain their data to help train the provider's models.

Setting the allowTrainingData dynamic bot parameter to true for a bot enables this option for the TTS and/or STT service provider, allowing retention of the customer's data.

The default value is false.
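For illustration, a bot entry that opts in to data retention might look like the sketch below. The allowTrainingData parameter comes from the text above; the bot name is hypothetical, and the parameter is assumed to sit alongside the bot's other settings.

```json
{
  "name": "my_bot",
  "allowTrainingData": true
}
```

Omitting the parameter leaves the default of false, so no data is retained for training.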

Advanced Features

Deepgram offers the following speech detection features that can be configured on VoiceAI Connect or dynamically by the bot:

- UtteranceEnd feature, configured by the bot parameter deepgramUtteranceEndMS (see Speech customization).
- Endpointing feature, configured by the bot parameter deepgramEndpointingMS (see Speech customization).
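The two speech detection parameters above might be set on a bot as in the following sketch. The parameter names come from the list; the bot name is hypothetical, and the values are illustrative only, assumed to be in milliseconds based on the MS suffix. See Speech customization for the supported ranges.

```json
{
  "name": "my_bot",
  "deepgramUtteranceEndMS": 1000,
  "deepgramEndpointingMS": 300
}
```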

Deepgram also supports a number of advanced features such as different models, keyword boosting, profanity filtering, and more. Review Deepgram’s features documentation for a full list.

Deepgram’s newest model Flux (Quick Start) introduces the concept of eager end-of-turn.

When enabled, VoiceAI Connect treats eager recognition as a hypothesis. If configured, VoiceAI Connect includes Deepgram’s is_final: false (eager) message in the hypothesis. To receive hypothesis events, see Receiving speech-to-text hypothesis notification.

Eager end-of-turn parameters are detailed in: https://developers.deepgram.com/docs/flux/configuration.

These additional features are configured on VoiceAI Connect using the sttOverrideConfig field. The parameters are passed along as key-value pairs in a JSON object. For parameters that support multiple values (like keywords), provide an array of strings:

```json
{
  "sttOverrideConfig": {
    "tier": "enhanced"
  }
}
```

sttOverrideConfig. Use the sttSpeechContexts parameter instead.

Connecting Deepgram using AudioCodes Live Hub

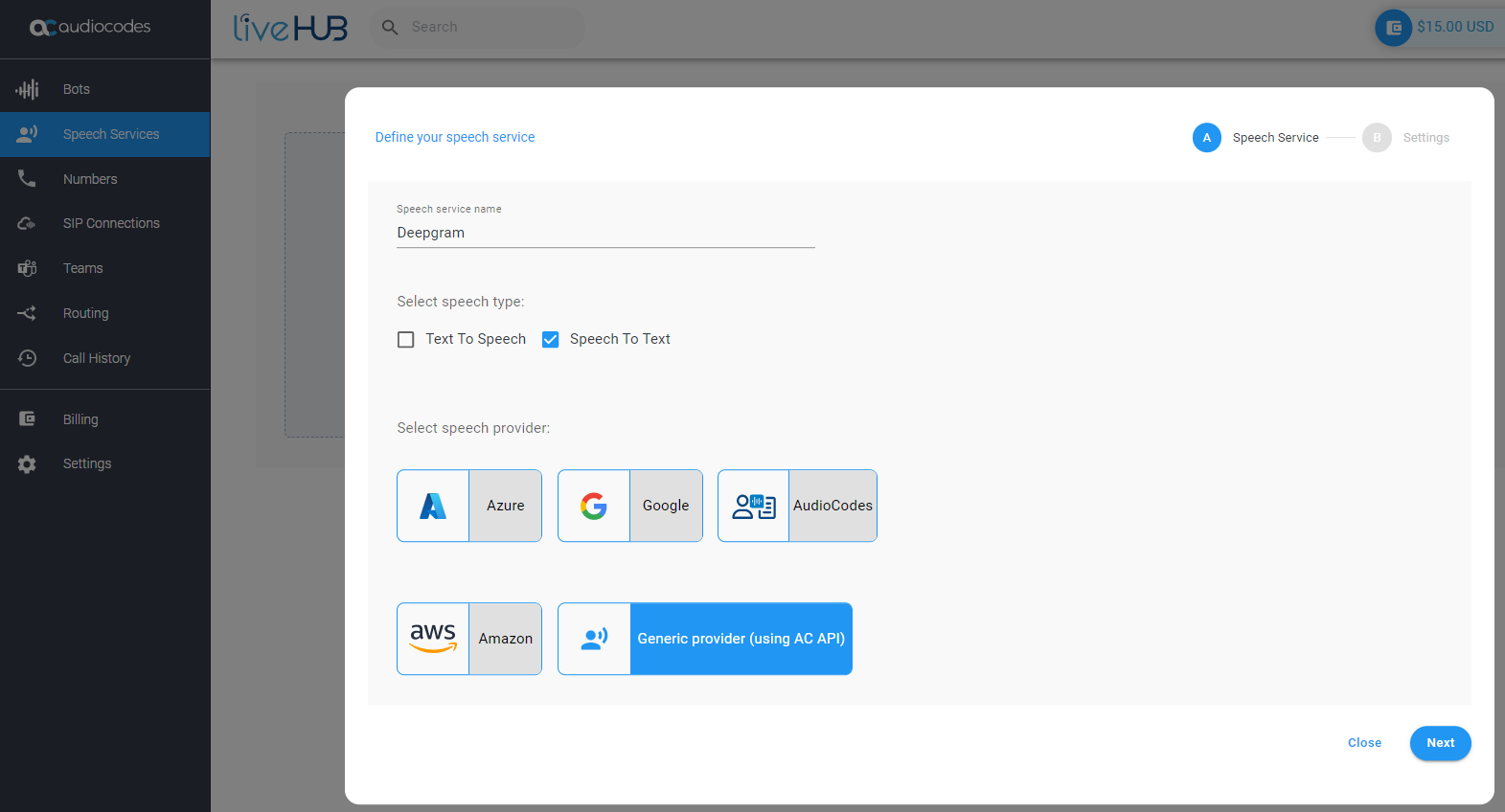

To connect to Deepgram's speech services using AudioCodes Live Hub:

- Sign in to the Live Hub portal.
- From the Navigation Menu pane, click Speech Services.
- Click the + (plus) icon, and then do the following:
  - In the 'Speech service name' field, type a name for the speech service.
  - Select only the Speech To Text check box.
  - Select the Generic provider option.
  - Click Next.
- In the 'Authentication Key' field, enter the token supplied by Deepgram.
- In the 'Speech To Text (STT) URL' field, enter the URL supplied by Deepgram.
- Click Create.

Uniphore

To connect VoiceAI Connect to the Uniphore speech-to-text service, set the type parameter in the providers section to uniphore.

You need to provide AudioCodes with the URL of your Uniphore speech-to-text endpoint instance. This URL (<address>:<port>) is configured on VoiceAI Connect using the sttHost parameter.
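A minimal sketch of the resulting provider entry, using only the type and sttHost parameters described above. The name value is hypothetical and the sttHost placeholder must be replaced with the address and port of your Uniphore instance.

```json
{
  "name": "my_uniphore",
  "type": "uniphore",
  "sttHost": "<address>:<port>"
}
```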