Storing call transcripts and audio recordings

This section describes how to store call transcripts and audio recordings.

For various reasons (for example, GDPR concerns), you may need to store transcripts locally, in the cloud, in both locations, or not at all.

- To store transcripts in a local database, set the storeTranscript parameter to true.
- To store transcripts in the cloud, set the saveTranscriptToStorage parameter to true.
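The four storage choices map onto the two parameters as shown in this minimal sketch. The helper name and the policy strings ("none", "local", "cloud", "both") are hypothetical; only storeTranscript and saveTranscriptToStorage come from this page. Both flags are set explicitly because local storage is the default.

```python
# Hypothetical helper: maps a storage policy to the two transcript
# parameters described on this page.
def transcript_storage_params(policy: str) -> dict:
    policies = {
        "none": (False, False),   # no transcripts stored anywhere
        "local": (True, False),   # local database only
        "cloud": (False, True),   # cloud storage only
        "both": (True, True),     # local database and cloud storage
    }
    if policy not in policies:
        raise ValueError(f"unknown policy: {policy!r}")
    local, cloud = policies[policy]
    return {"storeTranscript": local, "saveTranscriptToStorage": cloud}


print(transcript_storage_params("cloud"))
```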

Transcript message structure

The transcript document is the core data structure used to store and manage conversation transcripts. This section describes its schema, key fields, and how messages are organized.

A transcript document records the sequence of messages exchanged during a conversation. It is an array of message objects of several types.

The following table lists the fields of each message object:

| Field | Type | Description |
|---|---|---|
| timestamp | DateTime | The date and time when the message was created. |
| type | String | The message type identifier (for example, dialogMessage). |
| Key named after the message type (for example, dialogMessage) | Object | The message content. Its structure depends on the message type (see below). |
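The sketch below walks a transcript array and renders its dialog messages as readable lines. It assumes, based on the dialogMessage examples on this page, that each payload sits under a key equal to the entry's "type" value; the function names are hypothetical.

```python
import json


def iter_messages(entries):
    """Yield (timestamp, type, payload) for each transcript entry.

    Assumption: the payload object is stored under a key equal to the
    entry's "type" value, as in the dialogMessage examples on this page.
    """
    for entry in entries:
        msg_type = entry["type"]
        yield entry["timestamp"], msg_type, entry.get(msg_type, {})


def dialog_lines(entries):
    """Render dialog messages as 'Side: text' lines, in transcript order."""
    return [
        f"{payload.get('side', '?')}: {payload.get('message', '')}"
        for _, msg_type, payload in iter_messages(entries)
        if msg_type == "dialogMessage"
    ]


transcript = json.loads("""[
  {"timestamp": "2021-06-20T12:33:24.457Z", "type": "dialogMessage",
   "dialogMessage": {"message": "Hi, I'm your welcome prompt", "side": "Bot"}},
  {"timestamp": "2021-06-20T12:33:30.134Z", "type": "dialogMessage",
   "dialogMessage": {"message": "Hello.", "side": "Client"}}
]""")

for line in dialog_lines(transcript):
    print(line)
```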

Message Types and Structure

The following lists all the fields that are included in each type of message object.

Dialog Message

Contains user or bot dialog content.

- message: Text of the message.
- side: Indicates the sender (Client or Bot).
- botDelayMs: Milliseconds from VoiceAI Connect sending the message to the bot to receiving the bot’s message.
- botInterMessageDelayMs: For direct bots, milliseconds between bot messages.
- ttsDelayMs: Milliseconds from VoiceAI Connect sending the request to the TTS provider to receiving the beginning of the audio stream.
- sttDelayMs: Milliseconds from the end of the user’s speech to the recognition response from the STT provider (requires speechRecognition to be enabled).
- turnDelayMs: Milliseconds from the end of the user’s speech to the start of playback of the bot’s response (requires speechRecognition to be enabled).
- turnId: A randomly generated UUIDv4 that uniquely identifies the current turn (user speech + bot response).
- participant: Participant ID for Agent Assist sessions or conference calls.
- selfGenerated: Indicates whether the message was generated by the system (for example, botNoInput events).
- audioFilename: Audio file name. See sttSaveAudioToStorage and ttsSaveAudioToStorage.
- mediaFormat: Media format of the audio file. See sttSaveAudioToStorage and ttsSaveAudioToStorage.
- recognitionOutput: Speech recognition results from the STT provider.
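The delay fields above are plain millisecond counts, so latency can be summarized directly from a transcript. This is a minimal sketch with a hypothetical function name; it skips messages that lack the requested field, since fields such as sttDelayMs appear only on some messages.

```python
from statistics import mean


def latency_summary(entries, field):
    """Return (count, mean, max) of a millisecond delay field across dialog messages.

    Messages without the field are skipped; (0, None, None) means no
    dialog message carried it.
    """
    values = [
        entry["dialogMessage"][field]
        for entry in entries
        if entry.get("type") == "dialogMessage"
        and field in entry.get("dialogMessage", {})
    ]
    if not values:
        return 0, None, None
    return len(values), mean(values), max(values)


# Values taken from the transcript examples on this page.
entries = [
    {"type": "dialogMessage", "dialogMessage": {"side": "Bot", "ttsDelayMs": 404, "botDelayMs": 16}},
    {"type": "dialogMessage", "dialogMessage": {"side": "Client", "sttDelayMs": 2266}},
]
print(latency_summary(entries, "ttsDelayMs"))
```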

Control Message

Used for control signals within the conversation (e.g., configuration event).

- message: Control message text.
- side: Indicates the sender (Client or Bot).
- botDelayMs: Milliseconds from VoiceAI Connect sending the request to the bot to receiving the bot’s message.
- botInterMessageDelayMs: For direct bots, milliseconds between bot messages.

System Message

Represents system-level events.

- message: System message text.
- sysMessageType: Type of system message (Info, Error, or APICall).
- parameters: Additional parameters.
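Because sysMessageType distinguishes Info, Error, and APICall messages, errors can be pulled out of a transcript for troubleshooting. This sketch assumes the same payload-key convention as the dialogMessage examples; the type identifier for system messages is not shown on this page, so the code does not hard-code it, and "systemMessage" in the sample data is hypothetical.

```python
def system_errors(entries):
    """Collect (timestamp, message) for system messages whose sysMessageType is "Error".

    Assumption: the payload sits under the key named by the entry's "type"
    value. The type identifier itself is not hard-coded.
    """
    errors = []
    for entry in entries:
        payload = entry.get(entry.get("type"), {})
        if isinstance(payload, dict) and payload.get("sysMessageType") == "Error":
            errors.append((entry.get("timestamp"), payload.get("message")))
    return errors


# Hypothetical sample entries ("systemMessage" is an assumed type identifier).
sample = [
    {"timestamp": "t1", "type": "systemMessage", "systemMessage": {"message": "ok", "sysMessageType": "Info"}},
    {"timestamp": "t2", "type": "systemMessage", "systemMessage": {"message": "boom", "sysMessageType": "Error"}},
]
print(system_errors(sample))
```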

Raw Bot Message

All messages passed between the bot and VoiceAI Connect in their raw form.

- message: Raw message object.
- direction: Message direction (in or out).

Metadata Message

Metadata messages sent from the bot to Agent Assist applications.

metadataMessage: The complete metadata object.

Storing call transcripts locally

You can enable VoiceAI Connect to store, locally, the call transcripts produced by the speech-to-text provider. You can store call transcripts for all bots or per bot. The call transcripts are stored in the VoiceAI Connect database and can be viewed by the AudioCodes Administrator in the VoiceAI Connect Web-based management tool.

How to use it?

The following table lists the parameter used for storing call transcripts:

| Parameter | Type | Description |
|---|---|---|
| storeTranscript | Boolean | Enables VoiceAI Connect to store call transcripts (received from the speech-to-text provider) per bot. |

Retention and Privacy

Transcript documents expire after the period defined by the global parameter transcriptsRetentionSeconds (default: 24 hours), counted from their last update.
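The expiry rule is simple arithmetic: last update plus transcriptsRetentionSeconds. This sketch computes it; the function name is hypothetical, and using a message timestamp as the "last update" time is an assumption for illustration.

```python
from datetime import datetime, timedelta, timezone

DEFAULT_RETENTION_SECONDS = 24 * 60 * 60  # documented default: 24 hours


def transcript_expiry(last_update_iso: str,
                      retention_seconds: int = DEFAULT_RETENTION_SECONDS) -> datetime:
    """Return the UTC time at which a transcript expires, given its last-update time."""
    # Timestamps on this page end in "Z" (UTC); datetime.fromisoformat accepts
    # "Z" only from Python 3.11, so replace it with an explicit offset.
    last_update = datetime.fromisoformat(last_update_iso.replace("Z", "+00:00"))
    return (last_update + timedelta(seconds=retention_seconds)).astimezone(timezone.utc)


print(transcript_expiry("2021-06-20T12:33:30.134Z").isoformat())
```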

Transcripts can be disabled using the global parameter storeTranscript and the bot parameter storeTranscript.

For hiding sensitive user information, see sensitiveInfoOnTranscript.

Storing utterances on Microsoft's Azure Blob storage account

You can enable VoiceAI Connect to save utterances remotely in your Microsoft Azure Blob storage account. This includes both the audio (speech) recordings and the call transcript of the bot conversation. This feature can be useful for checking and troubleshooting the accuracy of the speech-to-text and text-to-speech results supplied by the speech service provider.

You can configure VoiceAI Connect to store either the speech-to-text results, text-to-speech results, or both. This storage feature is configured per bot.

Access to, and transfer of, audio recording and transcript files to Azure Blob storage is secured using HTTPS.

This feature is applicable only to VoiceAI Connect Enterprise (Version 2.8 and later).

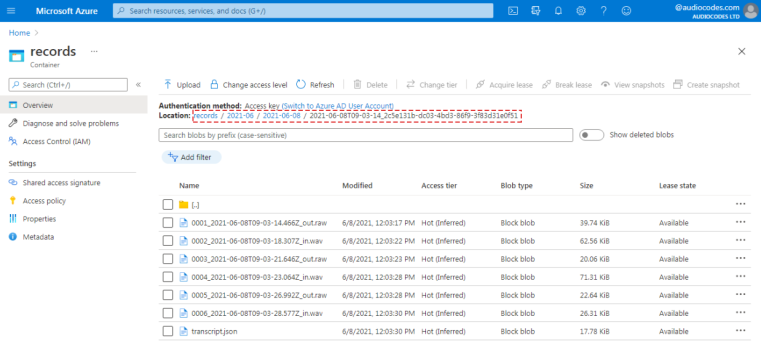

For each call, all its audio recording files and the call transcript file are saved on Azure Blob storage, under a dedicated folder in the following folder path:

<Storage Folder Name>/<Bot Name>/<yyyy-mm>/<yyyy-mm-dd>/<yyyy-mm-dd>T<Conversation Time in hh-mm-ss format>_<Conversation ID>

For example: records/MyPizzaBot/2021-06/2021-06-08/2021-06-08T05-53-14_3f21a6b3-c300-43a2-b694-d9530b5a62cf
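When locating a conversation's files in storage, the folder path can be rebuilt from its parts. This sketch only reproduces the naming pattern above (VoiceAI Connect creates the folders itself); the function name is hypothetical, and the start time is passed in as already known.

```python
from datetime import datetime


def conversation_folder(storage_folder: str, bot_name: str,
                        started: datetime, conversation_id: str) -> str:
    """Build <folder>/<bot>/<yyyy-mm>/<yyyy-mm-dd>/<yyyy-mm-dd>T<hh-mm-ss>_<id>."""
    return "/".join([
        storage_folder,
        bot_name,
        started.strftime("%Y-%m"),
        started.strftime("%Y-%m-%d"),
        f"{started.strftime('%Y-%m-%dT%H-%M-%S')}_{conversation_id}",
    ])


print(conversation_folder("records", "MyPizzaBot",
                          datetime(2021, 6, 8, 5, 53, 14),
                          "3f21a6b3-c300-43a2-b694-d9530b5a62cf"))
```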

The audio recording files are saved as .raw or .wav files (as received from or sent to the speech service provider) with the following file name: <sequential number>-<yyyy-mm-dd>T<time as hh-mm-ss.sss>Z_<direction>, where <direction> is "in" for the end user and "out" for the bot (that is, the text-to-speech result). For example: 0001-2021-06-08T05-53-14.466Z_in.wav
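To match recordings to speakers when reviewing a conversation, the file name can be split into its parts. In this sketch the regular expression and function name are hypothetical. It accepts either "-" or "_" after the sequence number because the examples on this page use both (0001-2021-06-08T... and 0001_2021-06-20T...), and it parses only the last path component so full audioFilename values also work.

```python
import re

AUDIO_NAME = re.compile(
    r"^(?P<seq>\d+)[-_]"                       # sequential number
    r"(?P<date>\d{4}-\d{2}-\d{2})T"            # yyyy-mm-dd
    r"(?P<time>\d{2}-\d{2}-\d{2}(?:\.\d+)?)Z_"  # hh-mm-ss with optional fraction
    r"(?P<direction>in|out)\.(?P<ext>raw|wav)$"
)


def parse_audio_filename(name: str) -> dict:
    """Split a recording file name into its parts; raise ValueError if it doesn't match."""
    match = AUDIO_NAME.match(name.rsplit("/", 1)[-1])
    if match is None:
        raise ValueError(f"unrecognized recording name: {name!r}")
    parts = match.groupdict()
    parts["seq"] = int(parts["seq"])
    # "in" is the end user's speech; "out" is the bot's text-to-speech audio.
    parts["speaker"] = "user" if parts["direction"] == "in" else "bot"
    return parts


print(parse_audio_filename("0001-2021-06-08T05-53-14.466Z_in.wav"))
```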

The call transcript is saved in JSON format with the file name "transcript.json". This file includes the transcript of the entire conversation. Dialog messages whose "side" parameter has the value "Bot" are call transcripts from the bot. For example:

```json
{
  "timestamp": "2021-06-20T12:33:24.457Z",
  "type": "dialogMessage",
  "dialogMessage": {
    "message": "Hi, I'm your welcome prompt",
    "side": "Bot",
    "ttsDelayMs": 404,
    "botDelayMs": 16,
    "audioFilename": "SpeechMockBot/2021-06/2021-06-20/2021-06-20T12-33-23_3f21a6b3-c300-43a2-b694-d9530b5a62cf/0001_2021-06-20T12-33-24.456Z_out.raw",
    "mediaFormat": "raw/lpcm16_8"
  }
}
```

Dialog messages whose "side" parameter has the value "Client" are call transcripts from the user (that is, the speech-to-text result). These messages also show the possible recognitions and their confidence levels. For example:

```json
{
  "timestamp": "2021-06-20T12:33:30.134Z",
  "type": "dialogMessage",
  "dialogMessage": {
    "message": "Hello.",
    "side": "Client",
    "sttDelayMs": 2266,
    "audioFilename": "SpeechMockBot/2021-06/2021-06-20/2021-06-20T12-33-23_3f21a6b3-c300-43a2-b694-d9530b5a62cf/0002_2021-06-20T12-33-27.310Z_in.wav",
    "mediaFormat": "wav/lpcm16_8",
    "recognitionOutput": {
      "confidence": 0.9490645,
      "recognitionOutput": {
        "Id": "02dd8d44e5ee48c292cd36286ac111ef",
        "RecognitionStatus": "Success",
        "Offset": 8300000,
        "Duration": 6800000,
        "DisplayText": "Hello.",
        "NBest": [
          {
            "Confidence": 0.9490645,
            "Lexical": "hello",
            "ITN": "hello",
            "MaskedITN": "hello",
            "Display": "Hello."
          },
          {
            "Confidence": 0.37945044,
            "Lexical": "helo",
            "ITN": "helo",
            "MaskedITN": "helo",
            "Display": "helo"
          },
          {
            "Confidence": 0.6942735,
            "Lexical": "hell oh",
            "ITN": "hell oh",
            "MaskedITN": "hell oh",
            "Display": "hell oh"
          },
          {
            "Confidence": 0.6183256,
            "Lexical": "hell o",
            "ITN": "hell o",
            "MaskedITN": "hell o",
            "Display": "hell O"
          },
          {
            "Confidence": 0.48436967,
            "Lexical": "ello",
            "ITN": "ello",
            "MaskedITN": "ello",
            "Display": "ello"
          }
        ]
      },
      "text": "Hello.",
      "utteranceEndOffset": 1510
    }
  }
}
```
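When checking speech-to-text accuracy, it helps to list the alternative recognitions by confidence. In the example above, NBest is not ordered by confidence (0.379 appears before 0.694), so this sketch sorts it. The nested recognitionOutput, recognitionOutput, NBest layout is taken from this Azure example and is an assumption for other STT providers; the function returns an empty list when that layout is absent. The function name is hypothetical.

```python
def nbest_alternatives(dialog_message: dict) -> list:
    """Return (display text, confidence) pairs, highest confidence first.

    Assumes the recognitionOutput -> recognitionOutput -> NBest nesting from
    the Azure example; returns [] if the message has no such structure.
    """
    outer = dialog_message.get("recognitionOutput") or {}
    inner = outer.get("recognitionOutput") or {}
    nbest = inner.get("NBest") or []
    pairs = [(alt.get("Display", ""), alt.get("Confidence", 0.0)) for alt in nbest]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


message = {
    "message": "Hello.",
    "side": "Client",
    "recognitionOutput": {"confidence": 0.9490645, "recognitionOutput": {"NBest": [
        {"Confidence": 0.9490645, "Display": "Hello."},
        {"Confidence": 0.37945044, "Display": "helo"},
        {"Confidence": 0.6942735, "Display": "hell oh"},
    ]}},
}
for text, confidence in nbest_alternatives(message):
    print(f"{confidence:.3f}  {text}")
```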

An example of stored audio recording files and call transcript file of a specific bot conversation on Azure Blob storage is shown below:

Note: The utilization of your Azure Blob storage may increase with all the saved audio recording and call transcript files. Therefore, make sure that you have sufficient storage capacity. You can free up storage by deleting old and unnecessary data, and by disabling this feature when you have finished troubleshooting.

How to use it?

This feature can be configured by the VoiceAI Connect Administrator using the following bot parameters:

|

Parameter |

Type |

Description |

|---|---|---|

|

String |

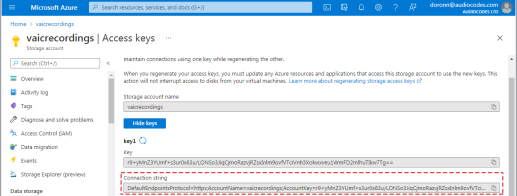

Defines the Azure connection key for accessing the Azure Blob storage account. This information is provided by the Customer, which is obtained from the Access keys page > Connection string field in Azure, as shown in the following example:

|

|

|

String |

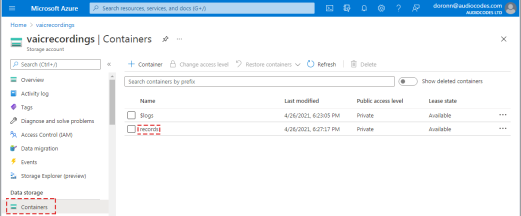

Defines the name of the Azure container that contains the Azure Blob storage folder. This information is provided by the Customer, which is obtained from the Containers page in Azure, as shown in the following example:

|

|

|

Boolean |

Enables VoiceAI Connect to store audio recordings received from the speech-to-text provider, per bot.

|

|

|

Boolean |

Enables VoiceAI Connect to store audio recordings received from the text-to-speech provider, per bot.

|

|

|

Boolean |

Enables VoiceAI Connect to store call transcripts received from the text-to-speech provider and speech-to-text provider, per bot.

Note: By default, transcripts are saved to a local database. If you don’t want to save transcripts to a local database, configure the This parameter is applicable only to VoiceAI Connect Enterprise Version 3.0.011 and later.

|

|

|

Boolean |

The following parameter is configured for bots by the administrator. When set to true, all speech-to-text (STT) and text-to-speech (TTS) recordings will include a WAV header, even when the original media format is in raw format. By default, the recordings retain the format of their source.

This parameter is applicable only to VoiceAI Connect Enterprise Version 3.24.4 and later.

|

The following is a configuration example for storing call transcripts and audio recordings on Azure Blob storage for "MyPizzaBot":

```json
{
  "name": "MyPizzaBot",
  "credentials": {
    "botSecret": "secret",
    "recordingAzureConnectionString": "DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=MyKey;EndpointSuffix=core.windows.net"
  },
  "recordingAzureContainer": "records",
  "sttSaveAudioToStorage": true,
  "ttsSaveAudioToStorage": true,
  "saveTranscriptToStorage": true
}
```
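A bot that saves audio or transcripts needs both the connection string and the container name. This sketch checks a bot object, intended for Azure, for missing settings before it is deployed. The function names are hypothetical. The parsing relies only on the standard Azure connection-string layout of semicolon-separated Key=Value pairs, splitting on the first "=" because account keys may end with "=" padding.

```python
SAVE_FLAGS = ("sttSaveAudioToStorage", "ttsSaveAudioToStorage", "saveTranscriptToStorage")


def parse_connection_string(conn: str) -> dict:
    """Split an Azure 'Key=Value;Key=Value' connection string into a dict."""
    # partition() splits on the first "=" only, keeping base64 "=" padding in values.
    pairs = (item.partition("=") for item in conn.split(";") if item)
    return {key: value for key, _, value in pairs}


def azure_recording_problems(bot: dict) -> list:
    """List missing Azure settings for a bot that saves audio or transcripts to storage."""
    if not any(bot.get(flag) for flag in SAVE_FLAGS):
        return []  # nothing is saved to storage, so nothing is required
    problems = []
    conn = bot.get("credentials", {}).get("recordingAzureConnectionString")
    if not conn:
        problems.append("credentials.recordingAzureConnectionString is missing")
    elif "AccountName" not in parse_connection_string(conn):
        problems.append("connection string has no AccountName")
    if not bot.get("recordingAzureContainer"):
        problems.append("recordingAzureContainer is missing")
    return problems


bot = {
    "name": "MyPizzaBot",
    "credentials": {
        "botSecret": "secret",
        "recordingAzureConnectionString": "DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=MyKey;EndpointSuffix=core.windows.net",
    },
    "recordingAzureContainer": "records",
    "sttSaveAudioToStorage": True,
    "ttsSaveAudioToStorage": True,
    "saveTranscriptToStorage": True,
}
print(azure_recording_problems(bot))
```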

Storing streams and transcript on AWS S3 storage

You can enable VoiceAI Connect to save streams and transcripts on AWS S3 storage. This feature can be useful when the VoiceAI Connect local storage is limited or hard to reach. When both the S3 bucket and the session manager run inside AWS infrastructure, the session manager can use the IAM identification method instead of explicit credentials.

How to use it?

This feature can be configured by the VoiceAI Connect Administrator using the following bot parameters:

| Parameter | Type | Description |
|---|---|---|
| recordingAwsProvider | String | Defines the reference for the AWS provider. |
| recordingAwsBucket | String | Defines the AWS S3 bucket name. |

Bot configuration example:

```json
{
  "name": "SpeechMockBot",
  . . .
  "credentials": {
    "botSecret": "secret"
  },
  . . .
  "sttSaveAudioToStorage": true,
  "ttsSaveAudioToStorage": true,
  "recordingAzureContainer": "records",
  "recordingAwsBucket": "s3BucketName",
  "recordingAwsProvider": "awsBucketProvider"
}
```
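Bot configurations are JSON, and Python's json module, like many JSON parsers, keeps only the last value when an object repeats a key. A parameter entered twice (for example, two recordingAwsProvider entries) can therefore silently override the first. This sketch uses the standard object_pairs_hook argument of json.loads to reject duplicates; the function name is hypothetical.

```python
import json


def load_config_strict(text: str):
    """Parse JSON like json.loads, but raise ValueError if any object repeats a key."""
    def reject_duplicates(pairs):
        seen = {}
        for key, value in pairs:
            if key in seen:
                raise ValueError(f"duplicate key: {key!r}")
            seen[key] = value
        return seen

    return json.loads(text, object_pairs_hook=reject_duplicates)


config = load_config_strict(
    '{"name": "SpeechMockBot", "recordingAwsBucket": "s3BucketName",'
    ' "recordingAwsProvider": "awsBucketProvider"}'
)
print(config["recordingAwsProvider"])
```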

Storing transcript and utterances recording on Google Cloud

You can enable VoiceAI Connect to save transcripts and call utterances on Google Cloud Platform (GCP) storage. This feature can be useful when the VoiceAI Connect local storage is limited or hard to reach. When both the storage bucket and the session manager run inside GCP infrastructure, the session manager can use the IAM identification method instead of explicit credentials.

How to use it?

This feature can be configured by the VoiceAI Connect Administrator using the following bot parameters:

| Parameter | Type | Description |
|---|---|---|
| recordingGoogleProvider | String | Defines the reference for the GCP provider. NOTE: When using IAM, the recordingGoogleProvider field is not required. |
| recordingGoogleBucket | String | Defines the GCP storage bucket name. |

Bot configuration example without IAM:

```json
{
  "providers": [
    {
      "name": "GCPBucketProvider",
      "type": "google",
      "projectId": "<PROJECT_ID>",
      "credentials": {
        "privateKey": "<PRIVATE_KEY>",
        "clientEmail": "<CLIENT_EMAIL>"
      }
    }
  ],
  "bots": [
    {
      "name": "ExampleBot",
      . . .
      "credentials": {
        "botSecret": "secret",
        . . .
      },
      "sttSaveAudioToStorage": true,
      "ttsSaveAudioToStorage": true,
      "recordingGoogleBucket": "googleBucketName",
      "recordingGoogleProvider": "GCPBucketProvider"
    }
  ]
}
```

Bot configuration example with IAM:

```json
{
  "name": "ExampleBot",
  . . .
  "credentials": {
    "botSecret": "secret",
    . . .
  },
  "sttSaveAudioToStorage": true,
  "ttsSaveAudioToStorage": true,
  "recordingGoogleBucket": "googleBucketName"
}
```
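The two GCP examples differ only in whether the bot references a provider through recordingGoogleProvider. This sketch resolves that reference against the providers list and treats a missing reference as the IAM case. It assumes provider names must match exactly (this page does not say how VoiceAI Connect compares them), so a stray space in a provider "name" is reported. The function name is hypothetical.

```python
def resolve_google_provider(config: dict, bot: dict):
    """Return the provider that bot["recordingGoogleProvider"] refers to, or None.

    None means the bot references no provider (the IAM case). A reference
    that matches no provider name raises LookupError.
    """
    ref = bot.get("recordingGoogleProvider")
    if ref is None:
        return None
    providers = config.get("providers", [])
    for provider in providers:
        if provider.get("name") == ref:  # assumption: exact name match
            return provider
    known = [p.get("name") for p in providers]
    raise LookupError(f"provider {ref!r} not found; known providers: {known}")


config = {
    "providers": [{"name": "GCPBucketProvider", "type": "google", "projectId": "<PROJECT_ID>"}],
    "bots": [{"name": "ExampleBot", "recordingGoogleBucket": "googleBucketName",
              "recordingGoogleProvider": "GCPBucketProvider"}],
}
print(resolve_google_provider(config, config["bots"][0])["type"])
```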