Agent assist

The agent assist feature enables a bot to monitor (receive the transcript of) an ongoing conversation between a customer and a human agent.

The bot can use its internal logic to act upon what is said in the conversation by sending out-of-band messages to the human agent or to other recipients.

This functionality can be used for different goals:

-

To provide assistance to a human agent as the need arises:

For example, a customer may ask the human agent for bank account information, and the bot can provide this information to the human agent. This way, the bot can help optimize the human agent's productivity and reduce the handling time of customer inquiries.

-

To monitor the conversation for keywords:

For example, the bot can monitor the conversation for foul language and, when it is detected, notify the human agent's supervisor.

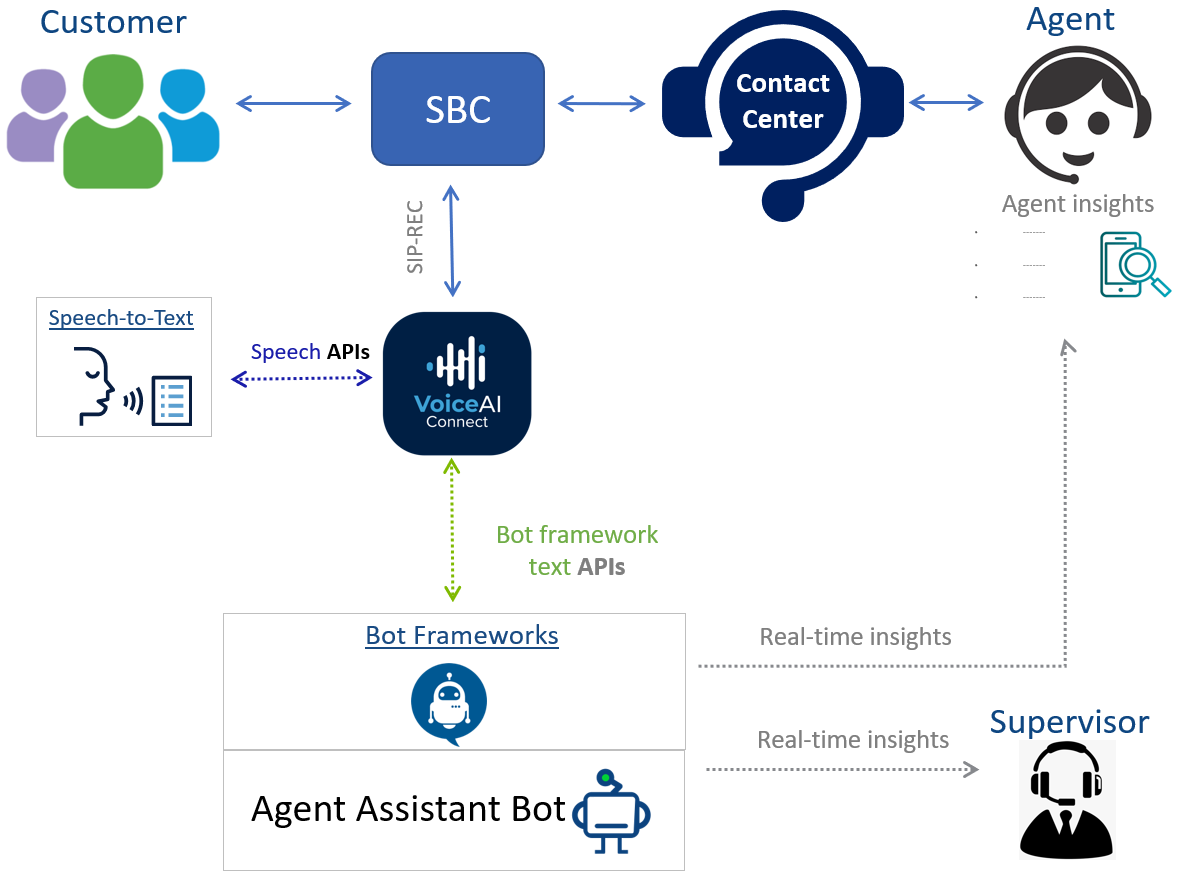

The following illustration provides an example of an agent assist implementation with VoiceAI Connect:

How do I use it?

This section describes the agent assist feature for regular bots. For integration with Google Agent Assist, see Google Agent Assist.

There are several ways to set this up. In general, the call being recorded passes through an SBC (referred to here as the original SBC), and a separate SBC serves VoiceAI Connect. An AudioCodes original SBC can start SIPRec on demand, whereas a non-AudioCodes SBC should be configured to start SIPRec for every call when the call starts.

The following functional components are required for implementing agent assist:

-

SIPREC Client (SRC) – typically, a session border controller (SBC)

-

VoiceAI Connect serving as a SIPREC Server (SRS) and providing connectivity with the agent-assist bot

-

Third-party speech-to-text service provider used by VoiceAI Connect to obtain real-time transcription of the conversation

AudioCodes offers professional services for assistance in implementing such setups.

Once VoiceAI Connect is set up as a SIPREC Server (SRS), route the recording sessions to your agent-assist bot.

Bot configuration

The following configuration parameters must be configured for the agent-assist bot:

-

bargeIn should be set to true (so speech-to-text recognition will not stop after each recognition).

-

connectOnPrompt should be set to false (so the conversation will be connected immediately without waiting for a bot prompt).

-

userNoInputGiveUpTimeoutMS should be set to 0, to prevent the call from being dropped after a long period of silence of all of the participants.
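As a minimal sketch, the three required settings can be captured in a typed object and checked before deployment. The interface `AgentAssistBotConfig` and the helper `checkAgentAssistConfig` are hypothetical names; how the configuration is actually applied to the bot depends on your VoiceAI Connect deployment.

```typescript
// Hypothetical shape covering only the parameters discussed above.
interface AgentAssistBotConfig {
  bargeIn: boolean;
  connectOnPrompt: boolean;
  userNoInputGiveUpTimeoutMS: number;
}

// Values required for an agent-assist bot, as listed above.
const agentAssistBotConfig: AgentAssistBotConfig = {
  bargeIn: true, // keep recognizing after each recognition
  connectOnPrompt: false, // connect immediately, without waiting for a bot prompt
  userNoInputGiveUpTimeoutMS: 0, // never drop the call because everyone is silent
};

// Returns human-readable problems; an empty array means the settings match the requirements.
function checkAgentAssistConfig(config: AgentAssistBotConfig): string[] {
  const problems: string[] = [];
  if (!config.bargeIn) {
    problems.push("bargeIn should be true");
  }
  if (config.connectOnPrompt) {
    problems.push("connectOnPrompt should be false");
  }
  if (config.userNoInputGiveUpTimeoutMS !== 0) {
    problems.push("userNoInputGiveUpTimeoutMS should be 0");
  }
  return problems;
}

console.log(checkAgentAssistConfig(agentAssistBotConfig)); // []
```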

Agent-assist conversation start

When an agent-assist conversation starts, the bot receives the initial activity (see Initial activity page). The initial activity includes an agentConnected field, specifying whether or not the agent assist session is already in progress, and a participants field, which the bot uses to identify the participants of the conversation being recorded (usually, the end-user and the human agent).

Example of the participants field:

```json
"participants": [
  {
    "participant": "caller",
    "uriUser": "A",
    "uriHost": "example.com",
    "displayName": "My name"
  },
  {
    "participant": "callee",
    "uriUser": "12345",
    "uriHost": "example.com"
  }
]
```
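Once the bot has extracted the participants array from the initial activity (where it sits depends on the bot framework), it can index the entries by identifier. This sketch mirrors the example above; treating uriUser, uriHost, and displayName as optional is an assumption (the callee entry above has no displayName), and the function names are hypothetical.

```typescript
// Fields of each entry in the participants array, as in the example above.
interface Participant {
  participant: string; // identifier, unique within the conversation (e.g. "caller")
  uriUser?: string;
  uriHost?: string;
  displayName?: string;
}

// Builds a lookup from participant identifier to its entry.
function indexParticipants(participants: Participant[]): Map<string, Participant> {
  const byId = new Map<string, Participant>();
  for (const p of participants) {
    byId.set(p.participant, p);
  }
  return byId;
}

// Produces a readable label, preferring the display name, then user@host.
function describeParticipant(p: Participant): string {
  if (p.displayName) {
    return `${p.displayName} (${p.participant})`;
  }
  if (p.uriUser && p.uriHost) {
    return `${p.uriUser}@${p.uriHost} (${p.participant})`;
  }
  return p.participant;
}

const participants: Participant[] = [
  { participant: "caller", uriUser: "A", uriHost: "example.com", displayName: "My name" },
  { participant: "callee", uriUser: "12345", uriHost: "example.com" },
];

const byId = indexParticipants(participants);
console.log(describeParticipant(byId.get("caller")!)); // My name (caller)
console.log(describeParticipant(byId.get("callee")!)); // 12345@example.com (callee)
```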

The following table lists the fields of each participant in the participants array:

| Property | Type | Description |
|---|---|---|
| participant | String | Identifier of the participant. The identifier is obtained from the SIPRec XML body. For example: `<participant id="+123456789" session="0000-0000-0000-0000-b44497aaf9597f7f"> <nameID aor="+123456789@example.com"></nameID> <ac:role>caller</ac:role> </participant>` The identifier is assured to be unique among the participants of the conversation. |
| uriUser | String | User-part of the URI of the participant, obtained from the participant's URI in the SIPRec XML body. |
| uriHost | String | Host-part of the URI of the participant, obtained from the participant's URI in the SIPRec XML body. |
| displayName | String | Display name of the participant. The value is obtained from the 'name' sub-element of the 'nameID' element in the SIPRec XML body. |

Starting recognition

To receive the transcript of a specific participant, your bot should send a startRecognition event indicating the identifier of the participant. To receive the transcript of both participants, send two startRecognition events, one for each participant. To avoid the cost of activating speech-to-text unnecessarily, the speech-to-text engine does not start recognizing an audio stream until a startRecognition activity is received.

See Sending activities page for instructions on how to send events using your bot framework.

To stop receiving the transcript of a participant during the conversation, your bot can send a stopRecognition event.

Example of a startRecognition event (the exact format depends on your bot framework):

```json
{
  "type": "event",
  "name": "startRecognition",
  "activityParams": {
    "targetParticipant": "caller"
  }
}
```

The same event with the activity parameters nested under channelData:

```json
{
  "type": "event",
  "name": "startRecognition",
  "channelData": {
    "activityParams": {
      "targetParticipant": "caller"
    }
  }
}
```

Add a Custom Payload fulfillment with the following content:

```json
{
  "activities": [{
    "type": "event",
    "name": "startRecognition",
    "activityParams": {
      "targetParticipant": "caller"
    }
  }]
}
```

Add a Custom Payload response with the following content:

```json
{
  "activities": [{
    "type": "event",
    "name": "startRecognition",
    "activityParams": {
      "targetParticipant": "caller"
    }
  }]
}
```
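Because each startRecognition or stopRecognition event targets a single participant, a bot that transcribes several participants sends one event per participant. This sketch builds such events in the format where activityParams is a top-level field of the event (the first example above); the function name is hypothetical, and delivering the objects to VoiceAI Connect is left to your bot framework.

```typescript
type RecognitionEventName = "startRecognition" | "stopRecognition";

interface RecognitionEvent {
  type: "event";
  name: RecognitionEventName;
  activityParams: { targetParticipant: string };
}

// Builds one event per participant identifier.
function recognitionEvents(name: RecognitionEventName, participantIds: string[]): RecognitionEvent[] {
  return participantIds.map(
    (id): RecognitionEvent => ({
      type: "event",
      name,
      activityParams: { targetParticipant: id },
    }),
  );
}

// Transcribe both sides of the conversation: two startRecognition events.
const startBoth = recognitionEvents("startRecognition", ["caller", "callee"]);

// Later, stop transcribing the callee only.
const stopCallee = recognitionEvents("stopRecognition", ["callee"]);

console.log(JSON.stringify([...startBoth, ...stopCallee], null, 2));
```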

Event parameters

The following table lists the parameters associated with startRecognition and stopRecognition events:

| Parameter | Type | Description |
|---|---|---|
| targetParticipant | String | Defines the participant identifier for which to start or stop speech recognition. |

Optimizing speech-to-text activation

The Speech customization feature can be used to activate speech-to-text only while the participant is talking, which can considerably reduce costs for agent-assist calls.

We recommend setting the speechDetection parameter to enabled for agent-assist calls. See the feature documentation for details.

Receiving transcript

The bot receives the transcript as regular text messages (see Receiving user's speech). To let the bot distinguish between the messages of the different participants, each message includes a participant field indicating the participant identifier and, optionally, a participantUriUser field indicating the user-part of the participant's URI.

For example:

```json
{
  "type": "message",
  "text": "Hi, how are you doing?",
  "parameters": {
    "participant": "caller",
    "participantUriUser": "Alice"
  }
}
```

The same message with the fields under channelData:

```json
{
  "type": "message",
  "text": "Hi, how are you doing?",
  "channelData": {
    "participant": "caller",
    "participantUriUser": "Alice"
  }
}
```

For Dialogflow bots, the text message is sent as text input, and the participant and participantUriUser fields are sent as payload parameters.


The participant is also set as an input context named `vaig-participant-<id>` (for example, `vaig-participant-caller`), which can be used to filter the matching intents. See Dialogflow documentation for more details.
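A bot can use the participant field to route each transcript line, for example to watch one participant's speech for keywords, as in the monitoring use case described at the start of this page. This sketch reads the field from either parameters or channelData, matching the two JSON examples above; the keyword list, the function names, and treating "caller" as the participant to watch are hypothetical choices.

```typescript
// Only the fields used here; real messages may carry more.
interface ParticipantFields {
  participant?: string;
  participantUriUser?: string;
}

interface TranscriptMessage {
  type: "message";
  text: string;
  parameters?: ParticipantFields;
  channelData?: ParticipantFields;
}

// The participant field may appear under parameters or channelData, depending on the framework.
function participantOf(msg: TranscriptMessage): string | undefined {
  return msg.parameters?.participant ?? msg.channelData?.participant;
}

// Hypothetical keywords to watch for.
const WATCHED_KEYWORDS = ["lawyer", "cancel my account"];

// Returns the watched keywords found in the message, only if it came from the watched participant.
function keywordHits(msg: TranscriptMessage, watchedParticipant: string): string[] {
  if (participantOf(msg) !== watchedParticipant) {
    return [];
  }
  const lower = msg.text.toLowerCase();
  return WATCHED_KEYWORDS.filter((kw) => lower.includes(kw));
}

const fromCaller: TranscriptMessage = {
  type: "message",
  text: "I want to cancel my account right now",
  channelData: { participant: "caller", participantUriUser: "Alice" },
};

const hits = keywordHits(fromCaller, "caller");
if (hits.length > 0) {
  // A real bot would notify the agent or supervisor here (see Sending messages by the bot).
  console.log(`Alert: caller said "${hits.join(", ")}"`);
}
```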

Sending messages by the bot

When the bot needs to send a notification to the human agent or to another recipient, it can do so in several ways:

-

Send the messages directly from the bot's implementation to the recipient application (e.g., the call-center application).

The bot should include an identifier of the conversation, which can be retrieved from the initial message that VoiceAI Connect sends to the bot.

Only this method is supported on Live Hub.

-

Send HTTP POST requests using the Sending metadata feature.

VoiceAI Connect can be configured to include an identifier of the conversation in the URL of the POST request.

-

Send SIP INFO requests to the human agent using the Sending metadata feature.

The bot messages are passed over the SIP call, which makes this option suitable only for small messages.

This option requires support from the SRC SBC.

-

For agent clients using the agent assist API (see Agent-assist API), send metadata events using the Sending metadata feature.

The bot's messages are sent to the agent client via the agent assist API.

The agent assist API allows the agent assist application to send and receive metadata to and from the bot via VoiceAI Connect. This API uses a socket.io WebSocket (see API for agent applications below for details).
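For the first method, the bot sends notifications straight to the recipient application. This sketch assumes a hypothetical call-center endpoint that accepts a JSON body containing the conversation identifier and a message; the URL, the body fields, and the function names are illustrative and not part of VoiceAI Connect. The fetch function is passed in so the sketch can run without a network; the global fetch in Node.js 18 and later should fit the `FetchLike` type.

```typescript
// Hypothetical body accepted by the call-center application (not defined by VoiceAI Connect).
interface AgentNotification {
  conversationId: string; // identifier taken from the initial message
  text: string;
}

// The subset of the fetch signature this sketch relies on.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number }>;

// POSTs one notification as JSON and rejects if the response is not OK.
async function notifyAgent(
  endpoint: string,
  notification: AgentNotification,
  fetchFn: FetchLike,
): Promise<void> {
  const res = await fetchFn(endpoint, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(notification),
  });
  if (!res.ok) {
    throw new Error(`notification failed with HTTP status ${res.status}`);
  }
}

// Usage with a stub that records the request body instead of sending it.
const sentBodies: string[] = [];
const recordingFetch: FetchLike = async (_url, init) => {
  sentBodies.push(init.body);
  return { ok: true, status: 200 };
};

notifyAgent(
  "https://callcenter.example.com/notifications", // hypothetical endpoint
  { conversationId: "conv-123", text: "Customer asked for the account balance" },
  recordingFetch,
).then(() => console.log(sentBodies[0]));
```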

Interactive agent assist

The interactive agent-assist mode allows the assistant bot to play audio messages to the customer or the human agent in specific scenarios. This mode is designed to enhance conversational experiences by addressing cases where audio playback is beneficial.

This mode is available as a preview. For more information, contact the AudioCodes Support Team.

This mode is available only for customers using an AudioCodes Session Border Controller (SBC).

This feature is applicable only to VoiceAI Connect Enterprise from VAIC-E-3.24 and later.

Example use cases:

-

Live translation: The assistant bot translates each participant’s speech and plays it to the other participant.

-

Proactive announcements: The assistant bot can play a message to one or both participants during the conversation. For example, if the bot detects fraud, it can alert the agent via voice.

-

Personally identifiable information (PII) concealing: The assistant bot can "mute" sensitive information shared by the customer (e.g., preventing the agent from hearing the customer’s credit card number).

When operating in interactive agent-assist mode, the bot has the following capabilities:

-

Playback of messages to either participant or both participants.

-

Control over the playback volume to each participant.

-

Control over the audio volume each participant hears from the other.

-

Configurable language settings for each participant, independently.

API for agent applications

The Agent Assist Applications API supports agents using frameworks such as Genesys and Microsoft Teams.

The agent establishes a socket.io WebSocket connection to VoiceAI Connect. After the WebSocket is set up, the agent sends an init message containing the agent’s phone number and other agent information.

During an active call, the agent can initiate an agent assist session by sending a getAgentAssist message to the service.

The bot can send metadata events to VoiceAI Connect, which are forwarded as-is to the agent application via the agent assist API.

If the agent application reloads, it sends a new init message. The service recognizes the existing call and responds with the agent’s existing sessions, so that the agent can continue from where it left off.

For API protocol details, see Agent Assist Applications API.
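The message flow above can be modeled from the agent application's side. In this sketch, the socket is any object with an `emit(event, payload)` method, which matches how a socket.io client socket sends events; treating each message as a socket.io event of the same name, the payload fields (`phoneNumber`, `callId`), and the class name are assumptions, so consult the Agent Assist Applications API for the actual message formats.

```typescript
// Anything that can emit named events with a payload, such as a socket.io client socket.
interface EventEmitterLike {
  emit(event: string, payload: unknown): void;
}

// Hypothetical agent-side wrapper around the init and getAgentAssist messages.
class AgentAssistClient {
  private readonly socket: EventEmitterLike;
  private readonly phoneNumber: string;

  constructor(socket: EventEmitterLike, phoneNumber: string) {
    this.socket = socket;
    this.phoneNumber = phoneNumber;
  }

  // Sent once the WebSocket is set up, and again after the agent application reloads.
  sendInit(): void {
    // Hypothetical payload: this page only says init carries the phone number and other agent info.
    this.socket.emit("init", { phoneNumber: this.phoneNumber });
  }

  // Asks for an agent assist session on an active call.
  requestAgentAssist(callId: string): void {
    // Hypothetical field name for identifying the call.
    this.socket.emit("getAgentAssist", { callId });
  }
}

// A recording stub stands in for the real socket so the flow can be inspected.
const emitted: Array<[string, unknown]> = [];
const stubSocket: EventEmitterLike = {
  emit: (event, payload) => {
    emitted.push([event, payload]);
  },
};

const client = new AgentAssistClient(stubSocket, "+123456789");
client.sendInit();
client.requestAgentAssist("call-1");
client.sendInit(); // after a reload; the service replies with the agent's existing sessions
console.log(emitted.map(([event]) => event)); // [ 'init', 'getAgentAssist', 'init' ]
```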

Bot configuration

The following additional parameters should be configured when using the Agent Assist Applications API:

-

sendEventsToBot should include the value "agentConnected".

-

agentAssistApiMode must be set to one of the following values:

  -

  "enabled-dynamic-siprec": SIPRec is started dynamically, only after the agent assist client requests an agent assist session for the call. This requires the original SBC to be an AudioCodes SBC that supports the SIPRec REST command.

  -

  "enabled": VoiceAI Connect assumes that the SIPRec session is started automatically when the call connects.
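A minimal sketch of these two settings as a typed object with a consistency check. It assumes sendEventsToBot is a list of event names that may also contain other values; the type and helper names are hypothetical.

```typescript
// The two modes described above.
type AgentAssistApiMode = "enabled-dynamic-siprec" | "enabled";

interface AgentAssistApiConfig {
  sendEventsToBot: string[]; // assumed to be a list of event names
  agentAssistApiMode: AgentAssistApiMode;
}

// Example for an original SBC that starts SIPRec automatically on every call.
const apiConfig: AgentAssistApiConfig = {
  sendEventsToBot: ["agentConnected"],
  agentAssistApiMode: "enabled",
};

// Returns problems found; "enabled-dynamic-siprec" additionally requires an AudioCodes original SBC.
function checkAgentAssistApiConfig(config: AgentAssistApiConfig, originalSbcIsAudioCodes: boolean): string[] {
  const problems: string[] = [];
  if (!config.sendEventsToBot.includes("agentConnected")) {
    problems.push('sendEventsToBot should include "agentConnected"');
  }
  if (config.agentAssistApiMode === "enabled-dynamic-siprec" && !originalSbcIsAudioCodes) {
    problems.push("enabled-dynamic-siprec requires an AudioCodes original SBC");
  }
  return problems;
}

console.log(checkAgentAssistApiConfig(apiConfig, false)); // []
```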

Receiving agent session status

When using the Agent Assist Applications API, the bot receives an agentConnected field in the start message (for more information, see Call initiation), and an agentConnected event whenever an agent assist client starts or stops the session for this call.

The bot can choose to begin recognition only once an agent assist session is active, and to stop recognition when the agent session ends.

Event format:

```json
{
  "type": "event",
  "name": "agentConnected",
  "value": {
    "connected": true,
    "agentId": "agent5",
    "metadataToBot": {}
  }
}
```


For Dialogflow bots, the event is received as an event query input with the following content:

```json
{
  "queryInput": {
    "event": {
      "name": "agentConnected",
      "parameters": {
        "connected": true,
        "agentId": "agent5",
        "metadataToBot": {}
      }
    }
  }
}
```


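Following the suggestion above, a bot can tie recognition to the agent session. This sketch handles the agentConnected event in the value format shown above and returns the startRecognition or stopRecognition events to send, using the top-level activityParams format from Starting recognition. The function name and the choice to transcribe every known participant are hypothetical.

```typescript
interface AgentConnectedEvent {
  type: "event";
  name: "agentConnected";
  value: { connected: boolean; agentId: string; metadataToBot?: Record<string, unknown> };
}

interface RecognitionActivity {
  type: "event";
  name: "startRecognition" | "stopRecognition";
  activityParams: { targetParticipant: string };
}

// Starts transcribing every participant when an agent session begins; stops when it ends.
function onAgentConnected(event: AgentConnectedEvent, participantIds: string[]): RecognitionActivity[] {
  const name = event.value.connected ? "startRecognition" : "stopRecognition";
  return participantIds.map(
    (id): RecognitionActivity => ({ type: "event", name, activityParams: { targetParticipant: id } }),
  );
}

const connectedEvent: AgentConnectedEvent = {
  type: "event",
  name: "agentConnected",
  value: { connected: true, agentId: "agent5", metadataToBot: {} },
};

const toSend = onAgentConnected(connectedEvent, ["caller", "callee"]);
console.log(toSend.map((a) => `${a.name} ${a.activityParams.targetParticipant}`));
```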