Speech customization

You can customize various speech features, as discussed in below.

Language and voice name

Speech-to-text and text-to-speech services interface with the user using a selected language (e.g., English US, English UK, or German).

Text-to-speech services also use a selected voice to speak to the user (e.g., female or male). In addition, you can also integrate VoiceAI Connect with Azure's Custom Neural Voice text-to-speech feature, which allows you to create a customized synthetic voice for your bot.

How to use this?

This feature is configured per bot by the Administrator, or dynamically by the bot during conversation:

|

Parameter |

Type |

Description |

|---|---|---|

|

String |

Defines the language (e.g., "en-ZA" for South African English) of the bot conversation and is used for speech-to-text and text-to-speech functionality. The value is obtained from the service provider.

Note:

|

|

|

String |

Defines the language (e.g., "en-ZA" for South African English) of the bot conversation and is used for the speech-to-text service. The parameter is required if different languages are used for the text-to-speech and speech-to-text services. If these services use the same language, you can use the The value is obtained from the service provider. VoiceAI Connect Enterprise supports this parameter from Version 3.2 and later. |

|

|

String |

Defines the language (e.g., "en-ZA" for South African English) of the bot conversation and is used for the text-to-speech service. The parameter is required if different languages are used for the text-to-speech and speech-to-text services. If these services use the same language, you can use the The value is obtained from the service provider. VoiceAI Connect Enterprise supports this parameter from Version 3.2 and later. |

|

|

String |

Defines the voice name for the text-to-speech service.

Note: This string is obtained from the text-to-speech service provider and must be provided to AudioCodes, as discussed in Text-to-speech service information. |

|

|

String |

Defines a case-sensitive regular expression (regex) that determines which |

|

|

String |

Defines the AWS text-to-speech voice as Neural Voice or Standard Voice.

Note:

VoiceAI Connect Enterprise supports this parameter from Version 3.0 and later.

|

|

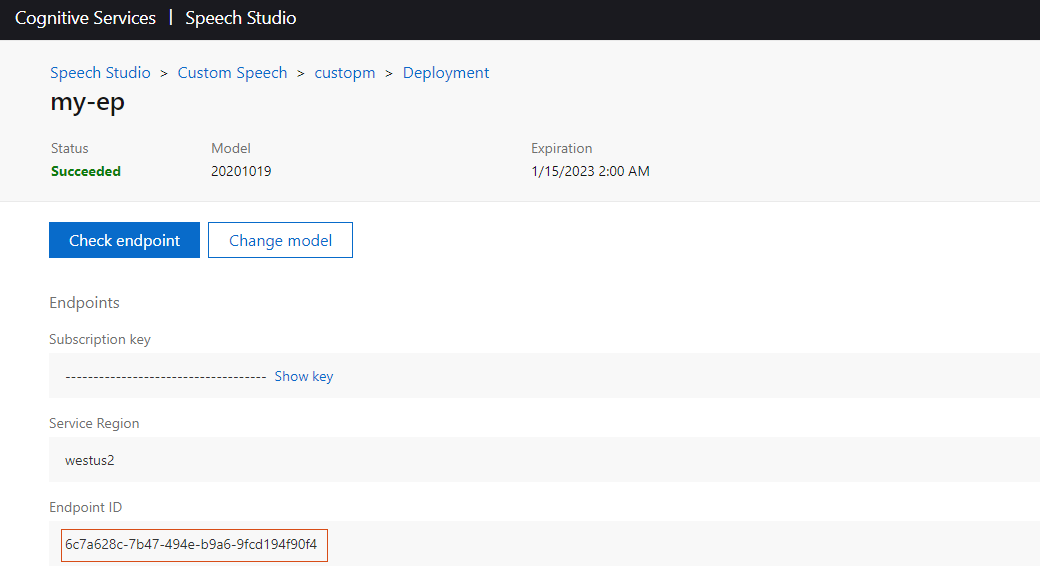

ttsDeploymentId

|

String |

Defines the customized synthetic voice model for Azure's text-to-speech Custom Neural Voice feature. Once you have deployed your custom text-to-speech endpoint on Azure, you can integrate it with VoiceAI Connect using this parameter. For more information on Azure's Custom Neural Voice feature, click here. By default, this parameter is undefined. Note:

VoiceAI Connect Enterprise supports this feature from Version 2.6 and later.

|

Language recognition for speech to text (Microsoft)

At any stage of the call, the bot can dynamically trigger a language detection (language identification) for speech to text. Only the next utterance spoken after the trigger will be analyzed. The bot attempts to recognize the language spoken by comparing it to up to ten possible alternative languages, and by meeting or exceeding a set confidence level.

How to use this?

This feature is configured per bot by the Administrator, or dynamically by the bot during conversation:

|

Parameter |

Type |

Description |

|---|---|---|

|

Boolean |

Enables the language detection feature.

|

|

|

String |

Sets the language detection mode (Azure only). They can be one of the following values:

VoiceAI Connect Enterprise supports this feature from Version 3.8.1 and later.

|

|

|

Array of Objects |

Defines a list of up to 10 alternative languages (in addition to the current language) that will be used to detect the language spoken. Object example ( {

"language": "de-DE",

"voiceName": "de-DE-KatjaNeural",

"sttContextId": "<context ID value>"

}

Note: If VoiceAI Connect Enterprise supports up to ten languages (previously up to three) from Version 3.8.1 and later.

|

|

|

Number |

Defines the confidence level that a language recognition must reach to enable a language switch. For example, a value of 0.35 indicates that a 35% or above confidence level of a language match must be reached to enable a language switch. The valid value range is 0.1 to 1. |

|

|

Boolean |

Enables the language switch to an alternate language if

|

For Example:

{

"languageDetectionActivate": true,

"alternativeLanguages": [

{

"language": "de-DE",

"voiceName": "de-DE-KatjaNeural"

},

{

"language": "fr-FR",

"voiceName": "fr-FR-DeniseNeural"

}

],

"languageDetectionAutoSwitch": true

}

Continuous automatic speech recognition (ASR)

By default, the speech-to-text service recognizes the user's end of utterance according to the duration of detected audio silence (or by other means). Each recognized utterance is sent by VoiceAI Connect to the bot as a separate textual message.

Sometimes, the detection of end of utterance occurs too quickly and the user is cut off while speaking. For example, when the user replies with a long description that is comprised of several sentences. In such cases, all the utterances should be sent together to the bot as one long textual message.

Continuous automatic speech recognition enables VoiceAI Connect to collect all the user's utterances. When it detects silence for a user-defined duration or a configured DTMF key (e.g., the # pound key) is pressed by the user, it concatenates the multiple speech-to-text detected utterances, and then sends them as a single textual message to the bot. In this way, the user can configure a longer silence timeout.

This feature is controlled by the Administrator, but the bot can dynamically control this mode during the conversation.

How to use it?

|

Parameter |

Type |

Description |

|---|---|---|

|

Boolean |

Enables the Continuous ASR feature. Continuous ASR enables VoiceAI Connect to concatenate multiple speech-to-text recognitions of the user and then send them as a single textual message to the bot.

|

|

|

String |

This parameter is applicable when the Continuous ASR feature is enabled. Defines a special DTMF key, which if pressed, causes the VoiceAI Connect to immediately send the accumulated recognitions of the user to the bot. For example, if configured to "#" and the user presses the pound key (#) on the phone's keypad, the device concatenates the accumulated recognitions and then sends them as one single textual message to the bot. The default is "#". Note: Using this feature incurs an additional delay from the user’s perspective because the speech is not sent immediately to the bot after it has been recognized. To overcome this delay, configure the parameter to a value that is appropriate to your environment. |

|

|

Number |

This parameter is applicable when the Continuous ASR feature is enabled. Defines the automatic speech recognition (ASR) timeout (in milliseconds). The timer is triggered when a recognition is received from the speech-to-text service. When VoiceAI Connect detects silence from the user for a duration configured by this parameter, it concatenates all the accumulated speech-to-text recognitions and sends them as one single textual message to the bot. The valid value range is 500 (i.e., 0.5 second) to 60000 (i.e., 1 minute). The default is 3000. Note: The parameter's value must be less than or equal to the value of the |

|

|

Number |

This parameter is applicable when the Continuous ASR feature is enabled. Defines the timeout (in milliseconds) between hypotheses and between the last hypothesis to the recognition. This timer is triggered when a hypothesis is received from the speech-to-text service. When the timer expires, the last utterance from the user is discarded and the previous speech-to-text recognitions are sent to the bot. The valid value range is 500 to 6000. The default is 3000. VoiceAI Connect Enterprise supports this feature from Version 2.4 and later.

|

Speech-to-text models and contexts

To improve the accuracy of speech recognition, some speech-to-text service providers such as Google Cloud allows you to dynamically associate speech-to-text contexts with each speech-to-text query. They also allow you to adapt to the specific use case jargon.

-

List of Phrases: VoiceAI Connect can be configured with a list of phrases or words that is passed to the speech-to-text service (Google's Cloud or Microsoft Azure) as "hints" for improving the accuracy of speech recognitions. For example, whenever a speaker says "weather", you want the speech-to-text service to transcribe it as "weather" and not "whether".

-

Speech Adaption using Boosts : Google's speech adaptation boost feature allows you to increase the recognition model bias, by assigning more weight to some phrases than others. For example, most of the bot users in a specific deployment may be saying the phrase "fair" more often than "fare". To increase the probability of the speech-to-text service recognizing the word as "fair", VoiceAI Connect be configured to apply a higher boost value (or weight) to the word "fair" in the configured speech context phrase.

-

Speech Recognition using Google Class Tokens: Google's classes represent common concepts that occur in natural language, such as monetary units and calendar dates. A class allows you to improve transcription accuracy for large groups of words that map to a common concept, but that don't always include identical words or phrases. For example, the audio data may include recordings of people saying their street address. One may say "my house is 123 Main Street, the fourth house on the left." In this case, you want Speech-to-Text to recognize the first sequence of numerals ("123") as an address rather than as an ordinal ("one-hundred twenty-third"). However, not all people live at "123 Main Street" and it's impractical to list every possible street address in a Speech Context object. Instead, you can use a class token (e.g., "$ADDRESSNUM") in the phrases field of the Speech Context object to indicate that a street number should be recognized no matter what the number actually is. VoiceAI Connect can be configured with multiple phrases for speech contexts using these Google's class tokens.

Google's Speech-to-Text service also provides specialized transcription models based on audio source, for example, phone calls or videos. This allows it to produce more accurate transcription results.

How to use it?

|

Parameter |

Type |

Description |

|---|---|---|

|

String |

"

Note:

|

|

|

String |

AudioCodes generic speech-to-text API: This optional parameter is used to pass whatever data is needed from the bot to the speech-to-text. Third-party vendors can use this to pass any JSON object they want to implement. Note: Only relevant for AC-STT-API. VoiceAI Connect Enterprise supports this feature from Version 3.0 and later. |

|

|

Array of Objects |

When using Google's Cloud or Microsoft Azure speech-to-text services, this parameter controls Speech Context phrases. The parameter can list phrases or words that is passed to the speech-to-text service as "hints" for improving the accuracy of speech recognitions. For example, whenever a speaker says "weather" frequently, you want the speech-to-text service to transcribe it as "weather" and not "whether". To do this, the parameter can be used to create a context for this word (and other similar phrases associated with weather). For Google's Cloud speech-to-text service, you can also use the parameter to define the boost number (0 to 20, where 20 is the highest) for context recognition of the specified speech context phrase. Speech-adaptation boost allows you to increase the recognition model bias by assigning more weight to some phrases than others. For example, when users say "weather" or "whether", you may want the speech-to-text to recognize the word as "weather". For more information, see https://cloud.google.com/speech-to-text/docs/context-strength. You can also use Google's class tokens to represent common concepts that occur in natural language, such as monetary units and calendar dates. A class allows you to improve transcription accuracy for large groups of words that map to a common concept, but that don't always include identical words or phrases. For example, the audio data may include recordings of people saying their street address. One may say "my house is 123 Main Street, the fourth house on the left." In this case, you want Speech-to-Text to recognize the first sequence of numerals ("123") as an address rather than as an ordinal ("one-hundred twenty-third"). However, not all people live at "123 Main Street" and it's impractical to list every possible street address in a SpeechContext object. Instead, you can use a class token in the phrases field of the SpeechContext object to indicate that a street number should be recognized no matter what the number actually is. For example: {

"sttSpeechContexts": [

{

"phrases": [

"weather"

],

"boost": 18

},

{

"phrases": [

"whether",

],

"boost": 2

},

{

"phrases": [

"fair"

]

},

{

"phrases": [

"$ADDRESSNUM"

]

}

]

}

Note:

|

|

|

Array of Strings |

Enables the use of Google speech-to-text custom model adaptation for speech-to-text calls. For more information on how to create model adaptation, go to Google's documentation. VoiceAI Connect Enterprise supports this feature for Google speech-to-text provider only, from Version 3.12 and later. |

|

|

Boolean |

Prevents the speech-to-text response from the bot to include punctuation marks (e.g., periods, commas and question marks).

Note: This feature requires support from the speech-to-text service provider. |

|

|

String |

Defines the Azure speech-to-text recognition mode. Can be one of the following values:

Note: The parameter is applicable only to the Microsoft Azure speech-to-text service. |

|

|

String |

Defines the Google speech-to-text interaction type. Can be one of the following values:

By default, the interaction type is not specified. For more information, see Google speech-to-text documentation. Note: The parameter is applicable only to the Google speech-to-text service. |

|

sttModel

|

String |

Defines the audio transcription model for Google Speech-to-Text.

By default, the parameter is undefined. For more information on Google's transcription models, click here. Note:

VoiceAI Connect Enterprise supports this feature from Version 2.6 and later.

|

sttEnhancedModel

|

Boolean |

Enables Google's Speech-to-Text enhanced speech recognition model. There are currently two enhanced models - phone call and video. These models have been optimized to more accurately transcribe audio data from these specific sources.

If configured to For more information on Google's enhanced speech recognition model, click here. Note:

VoiceAI Connect Enterprise supports this feature from Version 2.6 and later.

|

|

Array of Strings |

This parameter was deprecated in Version 2.4. Please use the

sttSpeechContexts parameter instead.When using Google's Cloud speech-to-text service, this parameter controls Speech Context phrases. The parameter can list phrases or words that is passed to the speech-to-text service as "hints" for improving the accuracy of speech recognitions. For more information on speech context (speech adaptation) as well details regarding tokens (class tokens) that can be used in phrases, go to https://cloud.google.com/speech-to-text/docs/speech-adaptation. For example, whenever a speaker says "weather" frequently, you want the speech-to-text service to transcribe it as "weather" and not "whether". To do this, the parameter can be used to create a context for this word (and other similar phrases associated with weather): "sttContextPhrases": ["weather"] Note:

|

|

|

Number |

This parameter was deprecated in Version 2.4. Please use the

sttSpeechContexts parameter instead.Defines the boost number for context recognition of the speech context phrase configured by For more information, see https://cloud.google.com/speech-to-text/docs/context-strength. Note:

|

|

|

String |

This parameter was deprecated in Version 2.2 and replaced by the |

Text-to-speech synthesis models for ElevenLabs

ElevenLabs offer various models for text-to-speech synthesis.

For more information on ElevenLabs models, refer to ElevenLabs documentation.

How to use it?

|

Parameter |

Type |

Description |

|---|---|---|

|

String |

Defines the model for text-to-speech synthesis. Note:

VoiceAI Connect Enterprise supports this feature from Version 3.22 and later.

|

Speech-to-text detection features for Deepgram

Deepgram offers various speech-to-text detection features.

How to use it?

These features are controlled by the VoiceAI Connect Administrator or dynamically by the bot:

| Parameter | Type | Description |

|---|---|---|

sttModel

|

String |

Defines the speech-to-text model.

This feature is applicable only to VoiceAI Connect Enterprise (Version 3.22 and later).

|

|

Number |

Defines the time to wait (ms) between transcribed words (i.e., detects gaps of pauses between words) before Deepgram's UtteranceEnd feature sends the UtteranceEnd message (i.e., end of spoken utterances). For more information, see Deepgram's documentation on UtteranceEnd. The default is 1000 ms.

This feature is applicable only to VoiceAI Connect Enterprise (Version 3.22 and later).

|

|

|

Number |

Defines the length of time (ms) that Deepgram's Endpointing feature uses to detect whether a speaker has finished speaking. When pauses in speech are detected for this duration, the Deepgram model assumes that no additional data will improve it's prediction of the endpoint and it returns the transcription. For more information, see Deepgram's documentation on Endpointing. The default is 500 ms.

This feature is applicable only to VoiceAI Connect Enterprise (Version 3.22 and later).

|

Nuance advanced speech services (Legacy WebSocket API)

When using Nuance's speech-to-text services (WebSocket API for Krypton), you can configure the bot to use Nuance's special features to transcribe text. Nuance Krypton uses a data pack and optional dynamic content. The data pack provides general information about a language or locale, while optional items such as domain language models (LM), wordsets and speaker profiles specialize Krypton's recognition abilities for a particular application or environment.

For more information (description and configuration) about these advanced Nuance features, please contact Nuance.

VoiceAI Connect Enterprise supports this feature from Version 2.6 and later.

How to use it?

This feature is controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

Array of Objects |

Defines the value of the sesssionObjects field of the CreateSession message. The sesssionObjects field is an array of objects -- wordsets, domain LM, and/or speaker profiles -- to load for the duration of the session. In-call updates will send a Load message with this value in the objects field. For example, for a Nuance domain-specific language model (LM): nuanceSessionObjects = [

{

"id":"dlm_bank",

"type":"application/x-nuance-domainlm",

"url":"https://host/dropbox/krypton/banking-dlm.zip",

"weight":100

},

{

"id":"ws_places",

"type":"application/x-nuance-wordset",

"body":"{\"PLACES\":[{\"literal\":\"Abington Pigotts\"},{\"literal\":\"Primary Mortgage Insurance\",\"spoken\":[\"Primary Mortgage Insurance\"]}]}"

}

]

Note: This parameter is applicable only to Nuance speech-to-text. |

|

|

Object |

Fields in this object can set the fields builtinWeights, domainLmWeights, useWordSets and recognitionParameters (except audioFormat) of the Recognition message. For example, to set the formatingType field of the recognitionParameters field of the Recognize message to "num_as_digits": nuanceRecognizeFieldsObject = {

"recognitionParameters":{

"formattingType":"num_as_digits"

}

}

Note: This parameter is applicable only to Nuance speech-to-text. |

|

|

Boolean |

Sets the Nuance Krypton session parameter enableCallRecording, which enables Nuance's call recording and logging speech-to-text feature.

Note: This parameter is applicable only to Nuance speech-to-text. |

Nuance advanced speech services (gRPC API and Nuance Mix)

When using Nuance's speech-to-text services (gRPC for Krypton and Nuance Mix), you can configure the bot to use Nuance's special features to transcribe text.

Nuance Krypton and Nuance Mix use a data pack and optional dynamic content. The data pack provides general information about a language or locale, while optional items such as domain language models (LM), wordsets and speaker profiles specialize Krypton's and Nuance Mix's recognition abilities for a particular application or environment. For more information (description and configuration) about these advanced Nuance features, please contact Nuance.

Click here for GRPC documentation.

VoiceAI Connect Enterprise supports this feature from Version 2.8 and later.

How to use it?

This feature is controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

Array of Objects |

Defines the parameters field value in the RecognitionRequest sent to the Nuance server. For example, for a Nuance word set: nuanceGRPCRecognitionResources = [

{

"inlineWordset": "{\"PLACES\":[{\"literal\":\"Abington Pigotts\"},{\"literal\":\"Primary Mortgage Insurance\",\"spoken\":[\"Primary Mortgage Insurance\"]}]}"

}

]

Note: This parameter is applicable only to Nuance speech-to-text gRPC. |

|

|

Object |

Defines the resources field value in RecognitionRequest sent to the Nuance server. For example, to set the formatting field to "num_as_digits", disable punctuation and call recording: nuanceGRPCRecognitionParameters =

{

"recognitionFlags": {

"autoPunctuate": false,

"suppressCallRecording": true

},

"formatting": {

"scheme": "num_as_digits"

}

}

Note: This parameter is applicable only to Nuance speech-to-text gRPC. |

Silence and speech detection

By default, VoiceAI Connect activates the speech-to-text service when the bot's prompt finishes playing to the user. In addition, when the Barge-In is feature is enabled, the speech-to-text service is activated when the call connects to the bot for the duration of the entire call.

VoiceAI Connect relies on automatic speech recognition (Speech-to-Text) services, which are billed for by the cloud framework provider. As billing is a function of the amount of time the connection to the speech-to-text service is open, it is desirable to minimize the duration of such connections between VoiceAI Connect and the speech-to-text service.

When a caller speaks, the speech may contain gaps such as pauses (silence) between spoken sentences. VoiceAI Connect can determine, through AudioCodes Session Border Controller's Speech Detection capability, when the caller is speaking (beginning of speech) and to activate the speech-to-text service only as needed. Disconnection from the speech-to-text service occurs when the speech-to-text service recognizes the end of the sentence.

This speech detection capability can reduce connection time to the speech-to-text service by as much as 50% (sometimes more), depending on the type and intensity of background sounds and the configuration of the system. The largest savings are realized when the bot is configured to allow “Barge-In” or when using the “Agent Assist” feature.

How to use it?

This feature is configured using the following bot parameters, which are controlled only by the Administrator:

|

Parameter |

Type |

Description |

|---|---|---|

|

String |

Enables VoiceAI Connect's speech detection feature.

Speech detection (verifying that speech is taking place) is performed on VAIC machines and uses Session Border Controller resources, while speech recognition (recognizing what words are being said) is performed by an external service.

|

|

|

Number |

Defines the timeout (milliseconds) for silence detection by the SBC of the user. If silence is detected for this duration, VoiceAI Connect will consider it as if the user is in silence. It will not start new speech-to-text service if speechDetection is enabled. The valid value is 10 to 10,000. The default is 500. |

Fast speech-to-text recognition

For some languages, the speech-to-text engine requires a longer time to decide on 'end of single utterance' (this can result in a poor user experience).

If the fast speech-to-text recognition workaround is enabled, VoiceAI Connect will trigger a recognition event after a specified time after the last hypothesis.

How to use it?

These parameters are controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

Boolean |

Enables fast speech-to-text recognition. If the parameter is enabled, use: {"singleUtterance": false }

Note: This parameter is only applicable to the following:

|

|

|

Number |

Defines the maximum time (in milliseconds) that VoiceAI Connect waits for a response from the speech-to-text provider since last hypothesis to activate recognition. The valid value is 10 to 600,000. The default is 1,000. If no new response is received between the last hypothesis and timeout expiration, VoiceAI Connect will trigger a recognition event. Note: This parameter is only applicable to the following:

|

Barge-In

The Barge-In feature controls VoiceAI Connect's behavior in scenarios where the user starts speaking or dials DTMF digits while the bot is playing its response to the user. In other words, the user interrupts ("barges-in") the bot.

By default, the Barge-In feature is disabled and VoiceAI Connect ignores user speech or DTMF input, from the detection of end of utterance until the bot has finished playing its response (or responses if the bot sends multiple consecutive response messages). Only after the bot has finished playing its message does VoiceAI Connect expect user speech or DTMF input. However, if no bot response arrives within a user-defined timeout, triggered from the detection of end of utterance, speech-to-text recognition is re-activated and the user can speak or send DTMF digits again.

When Barge-In is enabled, detection of user speech or DTMF input is always active. If the user starts to speak or presses DTMF digits while the bot is playing its response, VoiceAI Connect detects this speech or DTMF and immediately stops the bot response playback and sends the detected user utterances or DTMF to the bot. If there are additional queued text messages from the bot, they are purged from the queue.

How to use it?

These features are controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

Boolean |

Enables the Barge-In feature.

|

|

|

Boolean |

Enables barge-in on DTMF. For more information on DTMF, see Receiving DTMF digits notification. |

|

|

Number |

Defines the minimum number of words that the user must say for VoiceAI Connect to consider it a barge-in. For example, if configured to 4 and the user only says 3 words during the bot's playback response, no barge-in occurs. The valid range is 1 to 5. The default is 1. |

No user input notifications and actions

VoiceAI Connect disconnects the call after five minutes of user

inactivity. This is configurable, using the parameter userNoInputGiveUpTimeoutMS. In addition, you can configure

a timeout within which input from the user (speech or DTMF) should occur.

If the timeout expires without any user input, VoiceAI Connect can play a prompt (audio or text) to

the user, asking the user to say something.

If there is still no input from the user, VoiceAI Connect can prompt the user again (number of times

to prompt is configurable).

If there is still no input, VoiceAI Connect disconnects the call and can perform a specific activity

such as playing a prompt to the user or transferring the call (to a human agent).

VoiceAI Connect can also send an event message to the bot if there is no user input. The event

indicates how many times the timeout elapsed. For more information, see Receiving no user input notification.

How to use it?

These features are controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

Number |

Defines the maximum time (in milliseconds) that VoiceAI Connect waits for input from the user. If no input is received when this timeout expires, you can configure VoiceAI Connect to play a textual (see the If the The default is 0 (i.e., feature disabled). Note:

|

|

|

Number |

Defines the maximum time that VoiceAI Connect waits for user input before disconnecting the call. The value can be 0 (no timeout. i.e., call remains connected) or any number from 100. The default is 300000 ms (i.e, 5 minutes). This parameter can be changed by the Administrator and bot. Note: DTMF (any input) is considered as user input (in addition to

user speech) if the The parameter is applicable only to VoiceAI Connect Enterprise (Version 3.14 and later). |

|

|

Number |

Defines the maximum number of allowed timeouts (configured by the The default is 0 (i.e., only one timeout). For more information on the no user input feature, see the Note: If you have configured a prompt to play upon timeout expiry, the timer is triggered only after playing the prompt to the user. |

|

|

String |

Defines the textual prompt to play to the user when no input has been received from the user when the timeout expires (configured by The prompt can be configured in plain text or in Speech Synthesis Markup Language (SSML) format: By default, the parameter is not configured. Plain-text example: {

"name": "LondonTube",

"provider": "my_azure",

"displayName": "London Tube",

"userNoInputTimeoutMS": 5000,

"userNoInputSpeech": "Hi there. Please say something"

}

SSML example: {

"name": "LondonTube",

"provider": "my_azure",

"displayName": "London Tube",

"userNoInputTimeoutMS": 5000,

"userNoInputSpeech": "<speak>This is <say-as interpret-as=\"characters\">SSML</say-as></speak>"

}

For more information on the no user input feature, see the Note:

|

|

|

String |

Defines the URL from where the audio prompt is played to the user when no input has been received from the user when the timeout expires (configured by By default, the parameter is not configured. For more information on the no user input feature, see the Note: If you have also configured to play a textual prompt (see the |

|

|

Boolean |

Enables VoiceAI Connect to send an event message to the bot if there is no user input for the duration configured by the

This parameter is deprecated. The

sendEventsToBot parameter should be used instead.Note:

|

|

|

Number |

Defines a timer (in msec) that starts upon the first hypothesis and stops when there is a recognition. If the timer expires and the user is still talking (could be background noise), it means that the user is talking too much. In such a scenario, VoiceAI Connect stops the speech-to-text process and forces a recognition with whatever text has been accumulated until now from the speech-to-text provider, which it sends to the bot. The default is 0. Note: The feature is applicable only to the following speech-to-text providers: Azure, Google, and Google V2. The parameter is applicable only to VoiceAI Connect Enterprise (Version 3.22 and later). |

Sentiment analysis

Google Dialogflow’s sentiment analysis feature inspects end-user input and tries to determine an end-user's attitude (positive, negative, or neutral). For more information, see sentiment analysis for Dialogflow CX and Dialogflow ES.

VoiceAI Connect Enterprise supports this feature from Version 2.6 and later.

How to use it?

These features are controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

Boolean |

Enables the sentiment analysis feature.

Note: This parameter is applicable only to Google Dialogflow. |

Profanity filter

Profanity filter provides a few options in dealing with profane words in the transcription.

VoiceAI Connect Enterprise supports this feature only for Azure, Google and Yandex speech-to-text providers, from Version 3.6 and later.

How to use it?

These features are controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

String |

Defines the profanity filter options as one of the following values:

Note: Google speech-to-text mask replaces all letters with asterisk (*) characters except the first letter. |

Removing speech disfluencies from transcription

Speech disfluencies are breaks or disruptions in the flow of speech (e.g., "mmm"). This feature removes disfluencies from the transcription created by the speech-to-text service.

VoiceAI Connect Enterprise supports this feature only for Azure speech-to-text provider, from Version 3.12 and later.

How to use it?

This feature is controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

String |

Enables the removal of disfluencies from the transcription for speech-to-text services:

Note: The parameter is applicable only to Azure speech-to-text. VoiceAI Connect Enterprise supports this feature from Version 3.12 and later. |

Configuring audio file format for speech-to-text

You can configure the audio file format (WAV or RAW) for speech-to-text services. If you've enabled the storage of audio recordings (as described in Storing call transcripts and audio recordings), the recordings are stored in the configured format.

How to use it?

This feature is controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

Boolean |

Defines the format of the audio file:

Note: This parameter is applicable only to the following speech-to-text providers: Azure and AC-STT-API. VoiceAI Connect Enterprise supports this parameter from Version 3.20.5 and later. |

Segmentation silence timeout

Azure segmentation silence timeout adjusts how much non-speech audio is allowed within a phrase that's currently being spoken before that phrase is considered "done." For more information, go to Microsoft documentation.

VoiceAI Connect Enterprise supports this feature only for Azure speech-to-text provider, from Version 3.12 and later.

How to use it?

This feature is controlled by the VoiceAI Connect Administrator, or dynamically by the bot during the conversation (bot overrides VoiceAI Connect configuration):

|

Parameter |

Type |

Description |

|---|---|---|

|

Number |

Defines the segmentation silence timeout. The valid value is 100 to 5000 (in milliseconds). By default, not defined. Note: The parameter is applicable only to Azure speech-to-text. |